RAG evaluation series: validating the RAG performance of OpenAI vs LlamaIndex

Updated 1/29/24: Since the publication of this article, we have released a new version of the tvalmetrics sdk called tonic-validate. The new SDK includes several improvements to make it easier to use. Due to the release of the new SDK and the discontinuation of tvalmetrics, certain elements within this article are no longer up to date. To see details about the new SDK, you can visit our Github page here.

This is the second of a multi-part series I am doing to evaluate various RAG systems using Tonic Validate, a RAG evaluation and benchmarking platform, and the open source tool tvalmetrics. All the code and data used in this article is available here. I’ll be back shortly with another comparison of more RAG tools!

Introduction

Last week, we tested out OpenAI’s Assistants API and discovered there are some major problems with its ability to handle multiple documents. However, to better assess its performance, I am going to compare OpenAI’s Assistants RAG to another popular open source RAG library, LlamaIndex. Let’s get started!

Testing OpenAI’s Assistants RAG

In the previous article, we set up OpenAI’s Assistants RAG already. You can view the original setup here. Here’s a refresher:

Our testing set utilized 212 Paul Graham essays. Initially, we tried to upload all 212 essays to the RAG system, but discovered that OpenAI’s RAG caps uploads to only 20 documents at most. To get around this, we took the 212 essays, split them into five groups, and created a single file for each group, giving us a total of five files. We used five files instead of 20 because we ran into reliability issues at higher document counts that prevented us from running any tests on a 20-file set. With the five-file setup, we got the following results using our open source RAG benchmarking library, tvalmetrics:

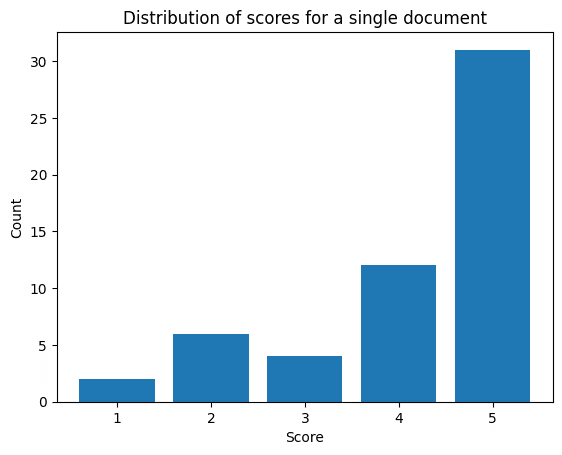

In our package, a score of 0 indicates no similarity and 5 indicates perfect similarity. The results above are less than desirable given the mean similarity score of 2.41 and median of 2. This low score is caused by OpenAI’s RAG system’s inability to find the relevant text in the document, resulting in the system returning no answer.

However, we then tried combining all 212 essays into a single document and the performance dramatically improved.

Not only did the mean similarity score jump to 4.16, but the median went up to a perfect 5.0. The speed of response also dramatically improved. Instead of taking almost an hour or more, the test was able to run in minutes. This shows that OpenAI’s RAG system is capable of good scores under specific working conditions, such as working on single documents. While we did achieve a high multi-document score once, this was a fleeting success as we weren’t able to replicate the result again.

Testing LlamaIndex

Now that we’ve recapped the OpenAI results, let’s assess LlamaIndex.

Preparing the experiment

To keep things fair for OpenAI, we will still use the Assistants API with GPT-4 Turbo in case there are any differences in performance in the Assistants API vs the regular API. However, I will turn off file retrieval, forcing the Assistants API to rely on LlamaIndex instead. I will also use the same experimental conditions I used for my OpenAI evaluation. First, I will test the tool using five combined documents to get a baseline for how LlamaIndex performs with multiple documents, then repeat the test using a single combined document. To set up LlamaIndex for this test, I ran the following code:

To ingest the documents, I ran this code:

To see how I created the single- and multiple-document training sets and the question-answer pairs used as the test set, you can take a look at my write-up in the last blog post, here.

Spot checking the setup

Finally, we can do a spot check to see how LlamaIndex performs.

For both the five-document setup and the single-document setup, the LLM returned the same correct response:

- Airbnb's monthly financial goal to achieve ramen profitability during their time at Y Combinator was $4000 a month.

Unlike OpenAI’s system, LlamaIndex was able to respond correctly to my query while using multiple documents and the answer stayed consistent each time I asked the question. Notably, when using a single document, the performance was about the same between the two. OpenAI’s answer is a bit more descriptive, but it also still hallucinates the source count. So, I would lean more towards saying I prefer LlamaIndex myself.

Evaluating the RAG system

Using the five-document setup, I got the following results:

Using multiple documents, LlamaIndex performed much better than OpenAI. Its mean similarity score was ~3.8, which is slightly above average, and its median was 5.0, which is excellent. The run time was only seven minutes for the five documents compared with almost an hour for OpenAI’s system using the same setup. I also noticed that the LlamaIndex system was dramatically less prone to crashing compared with OpenAI's system, which indicates that the RAG system itself was the problem for OpenAI's reliability as opposed to the Assistants API.

However, for the single-document setup, LlamaIndex performs slightly worse than OpenAI’s RAG system, with a mean of similarity score of 3.7 (vs OpenAI’s 4.16) and median of 4.0 (vs 5.0).

I should note that I got slightly better results by tweaking some of the LlamaIndex parameters, including changing the chunk size to 80 tokens, the chunk overlap to 60 tokens, and the number of provided chunks to 12, and by using the hybrid search option in LlamaIndex. Doing so yielded results that were closer to OpenAI, but not quite as good:

While the performance between the two systems is now closer, OpenAI still edges out ahead on single documents only. Keep in mind that the settings I used are tuned towards the type of questions I am asking (ie, short questions with an answer that’s very obvious in the text). These settings won’t work in all scenarios, whereas OpenAI managed to achieve good performance with settings that should, in theory, work in any situation. Although, because OpenAI doesn’t allow for a lot of customization of their settings, you could be leaving performance on the table. In the end, it’s up to you whether you want more customization with the ability to get better results in certain scenarios or a general out-of-the-box tool that can achieve decent performance (...on single documents only, that is).

Conclusion

OpenAI's RAG system does seem promising, but performance issues with multiple documents significantly decreases its usefulness. Their system can function well on single documents. However, most people will probably want to operate their RAG system on a corpus of different documents. While they can stuff all their documents into a single, monolithic document, that’s a hack and shouldn’t be required for a well-functioning, approachable RAG system. That, coupled with the 20-file limit, makes me hesitant to recommend anyone replace their existing RAG pipeline with OpenAI's RAG anytime soon. However, as I’ve said before, there is potential for improvement for OpenAI. While running some spot checks on their RAG system for GPTs, I noticed that the performance was much better on multiple documents. The bad performance is solely limited to the Assistants API itself. If OpenAI worked to bring the Assistants API quality up to that of the GPTs and removed the file limit, then I could see companies considering migrating from LlamaIndex if they are willing to give up some of the customizability that LlamaIndex provides. However, until that day comes, I recommend using LlamaIndex.

All the code and data used in this article is available here. I’m curious to hear your take on OpenAI Assistants, GPTs, and tvalmetrics! Reach out to me at ethanp@tonic.ai.

.svg)

.svg)

.svg)

.avif)