Delphix vs Tonic.ai: Which is the better choice?

Tonic.ai’s modern test data platform boosts engineering velocity with cross-database subsetting, cloud database support, and complex data handling.

Why choose Tonic.ai over Delphix

Optimized ease of use

With its modern UI, full API, and native database connectors, Tonic.ai provides rapid time-to-value through streamlined workflows built for today’s developers. Onboarding is far easier with Tonic.ai than with Delphix, enabling teams to get the data they need in days rather than months.

Better performance at scale

While Delphix runs into performance issues when processing large datasets, Tonic.ai is architected to match the scale and speed of data warehouses like Snowflake and Databricks. Tonic users regularly process PBs of data using complex de-identification configurations.

Higher quality output data

Tonic.ai’s focus has always been on making data useful, not just masked. With capabilities like cross-database consistency, column linking, and complex generators for JSON and regex data, the platform maintains the underlying business logic in your test data so your test suites work.

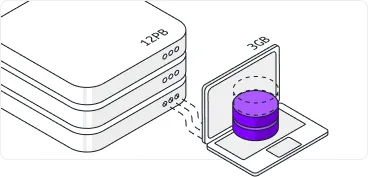

Subsetting with referential integrity

Tonic.ai’s patented subsetter works across your database, not just one table at a time, to shrink PBs down to GBs while preserving referential integrity across tables. Create targeted datasets using custom WHERE clauses or simple percentages to pull just the data you need.
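
The idea behind subsetting with referential integrity can be shown with a generic sketch. It is not Tonic.ai’s patented algorithm or API. It assumes a hypothetical two-table schema (`customers` and `orders`) in an in-memory SQLite database, selects target rows with a WHERE clause, and then pulls in every parent row those targets reference so that no foreign key in the subset points at a missing row.

```python
import sqlite3


def build_source() -> sqlite3.Connection:
    """Create a tiny in-memory source database with a hypothetical two-table schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER REFERENCES customers(id),
            total REAL
        );
        INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
        INSERT INTO orders VALUES
            (10, 1, 250.0), (11, 2, 40.0), (12, 1, 75.0), (13, 3, 900.0);
        """
    )
    return conn


def subset_orders(conn: sqlite3.Connection, where: str) -> dict:
    """Pick target orders with a WHERE clause, then pull in every customer they reference.

    Following the foreign key "upstream" guarantees that no order in the
    subset points at a customer that was left behind.
    """
    # The WHERE clause is interpolated directly, so it must come from trusted config.
    orders = conn.execute(
        f"SELECT id, customer_id, total FROM orders WHERE {where} ORDER BY id"
    ).fetchall()
    customer_ids = sorted({customer_id for _, customer_id, _ in orders})
    if not customer_ids:
        return {"orders": [], "customers": []}
    placeholders = ",".join("?" for _ in customer_ids)
    customers = conn.execute(
        f"SELECT id, name FROM customers WHERE id IN ({placeholders}) ORDER BY id",
        customer_ids,
    ).fetchall()
    return {"orders": orders, "customers": customers}


if __name__ == "__main__":
    print(subset_orders(build_source(), "total > 100"))
```

Here `total > 100` keeps orders 10 and 13 and pulls in customers 1 and 3; customer 2 is dropped because nothing in the subset references it. A real subsetter would walk the full foreign-key graph (including any declared virtual keys) rather than one hard-coded relationship.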

Learn why teams are switching to Tonic.ai.

The Delphix alternative that customers love

Native and performant cloud integrations

From relational databases to data warehouses to NoSQL, Tonic.ai’s native data source connectors provide customers with the coverage and reliability they need to connect to their data without fickle workarounds.

Support for complex data at scale

Tonic.ai’s robust support for complex data types, along with its consistent masking capabilities, enables users to de-identify data at scale without breaking the data’s underlying business logic.

Consistency and referential integrity

Maintaining referential integrity within your test data is essential for ensuring its utility, which is why Tonic.ai provides features like consistent input-to-output masking and virtual foreign keys to maintain relationships and business logic across tables and databases.

Intuitive UI

As a modern data platform built with today’s developers in mind, Tonic.ai offers a UI known for its ease of use and continuous updates, providing an optimized no-code experience.

Tonic.ai features that outperform Delphix

Support for cloud-based data

Tonic.ai is built to work with data warehouses and data in the cloud, offering native connectors to Snowflake, Databricks, Redshift, BigQuery, and data in Amazon S3.

Cross-database consistency

Map the same input to the same output across an entire database or across multiple databases of varying types to maintain referential integrity.
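
Consistent input-to-output mapping is commonly achieved with a keyed, deterministic function. The sketch below uses HMAC-SHA256 from Python’s standard library; it is a generic illustration, not a description of how Tonic.ai implements consistency, and the key, the normalization rule, and the `example.com` output format are all assumptions.

```python
import hashlib
import hmac

# Hypothetical secret: in practice it would live in a secrets manager and be
# shared by every job that must produce matching masked values.
MASKING_KEY = b"example-key-do-not-use"


def mask_email(email: str, key: bytes = MASKING_KEY) -> str:
    """Deterministically pseudonymize an email address.

    The same (normalized) input and key always yield the same output, so a value
    masked in one table or database still matches the copy masked elsewhere.
    """
    normalized = email.strip().lower()  # treat "Ada@Corp.com" and "ada@corp.com" alike
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}@example.com"


# Two "databases" that both store the same customer email.
postgres_users = [{"email": "ada@corp.com", "plan": "pro"}]
mongo_events = [{"email": "Ada@Corp.com", "action": "login"}]

masked_users = [{**row, "email": mask_email(row["email"])} for row in postgres_users]
masked_events = [{**row, "email": mask_email(row["email"])} for row in mongo_events]

# The join key survives masking, so cross-database relationships still line up.
print(masked_users[0]["email"] == masked_events[0]["email"])  # True
```

Because the output depends only on the input and the key, rotating the key changes every masked value at once, while keeping it fixed preserves joins across runs.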

Cross-database subsetting

Tonic.ai’s patented subsetter works across your full database to shrink PBs down to GBs, pulling just the data you need.

Realistic synthetic data from scratch

Leverage the industry-leading AI agent for synthetic data generation to hydrate developer environments with complex, hyper-realistic data in minutes.

Native connectors for NoSQL

Tonic.ai natively supports MongoDB, DocumentDB, and DynamoDB, providing masking, subsetting, and synthesis for semi-structured data.

Complex data generators

De-identify JSON, XML, regex-matched text, and other complex data types with ease, maintaining consistency to preserve the business logic within your data.
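
As a rough illustration of structure-preserving masking for JSON, the sketch below walks a document recursively and replaces only the values of fields it treats as sensitive, leaving keys, nesting, and other values untouched. The field list, the key, and the `anon_` token format are hypothetical; this is not Tonic.ai’s generator API.

```python
import hashlib
import hmac
import json
from typing import Any, Optional

KEY = b"example-key-do-not-use"  # hypothetical secret
SENSITIVE_KEYS = {"name", "email", "ssn"}  # hypothetical list of fields to mask


def pseudonym(value: str) -> str:
    """Deterministic replacement token, so repeated values stay consistent."""
    return "anon_" + hmac.new(KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:10]


def mask_json(node: Any, field: Optional[str] = None) -> Any:
    """Recursively mask sensitive string fields while keeping the document's shape."""
    if isinstance(node, dict):
        return {k: mask_json(v, k) for k, v in node.items()}
    if isinstance(node, list):
        # List items inherit the field name of the list that contains them.
        return [mask_json(item, field) for item in node]
    if field in SENSITIVE_KEYS and isinstance(node, str):
        return pseudonym(node)
    return node  # numbers, booleans, nulls, and non-sensitive strings pass through


record = json.loads(
    '{"id": 7, "customer": {"name": "Ada", "email": "ada@corp.com", "tags": ["vip"]},'
    ' "contacts": [{"email": "ada@corp.com"}]}'
)
print(json.dumps(mask_json(record), indent=2))
```

The same email appearing in two places in the document masks to the same token, so application code that relies on those values matching keeps working.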

Last updated December 2025. Comparison based on Delphix’s full suite of services.
