LLM fine-tuning

The problem

In regulated industries like healthcare, fine-tuning Large Language Models (LLMs) on internal data is limited by strict rules around personally identifiable information (PII) and protected health information (PHI). Although this sensitive data is essential for improving model performance, regulatory and privacy risks make it difficult to use.

For example, suppose you want to fine-tune a model to convert unstructured medical notes into structured outputs that follow the HL7 FHIR standard, a vital task because most clinical data is unstructured. Fine-tuning on this data risks HIPAA violations, and the model may memorize and unintentionally reveal sensitive patient details. This is especially challenging because PHI such as names and birthdates is necessary for the task but also highly regulated.

The solution

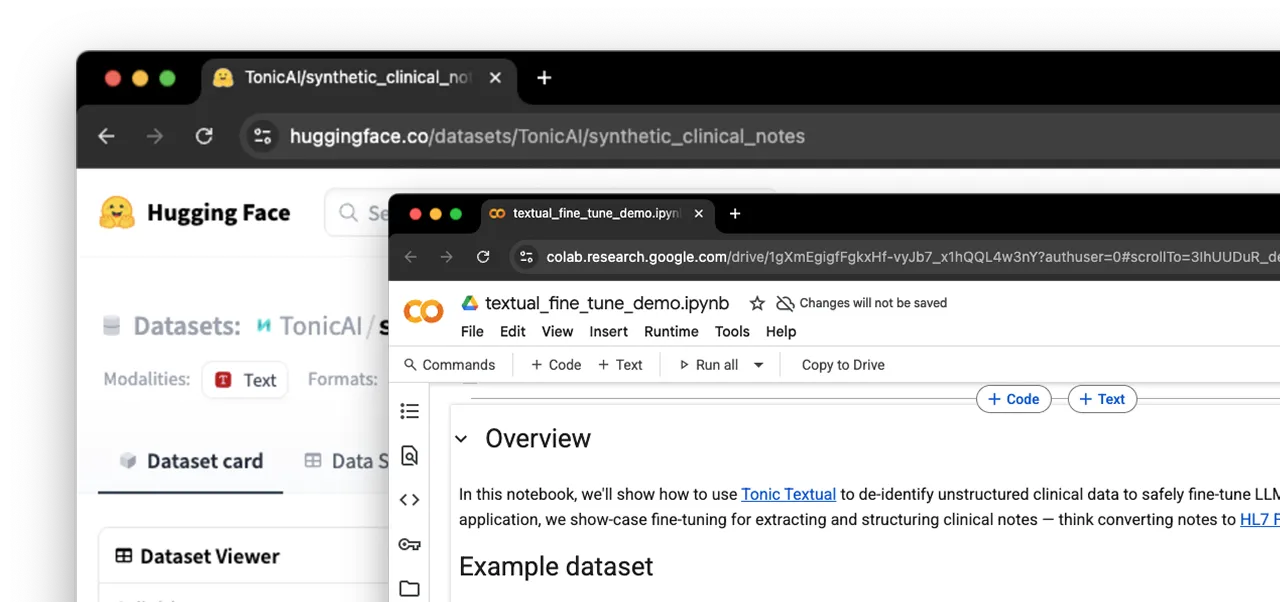

Tonic Textual addresses this challenge by enabling safe fine-tuning on sensitive data. In this scenario, Textual is used to transform unstructured medical notes by replacing sensitive entities with realistic synthetic substitutes. Tonic Textual leverages proprietary NER models to identify sensitive values at scale, enabling data teams to process large volumes of unstructured data across a variety of file types and formats.

This preserves the contextual utility of the data while mitigating the risk of memorization and regulatory violations, allowing for effective fine-tuning of an LLM that generates HL7 FHIR-like structured outputs—without compromising patient privacy.

Watch Ander Steele, Tonic.ai’s head of AI, use Textual to create a privacy-protected dataset from unstructured clinical notes, benchmark its performance, and then feed it to an LLM.

Playbook steps

Load the dataset

Use Textual to generate a safe version of your data by identifying and replacing sensitive information with realistic synthetic substitutes:

- Install the Textual SDK and create an API key

- Determine relevant entity types required to maintain compliance
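To make the replacement step concrete, here is a minimal sketch of how detected entities can be swapped for consistent synthetic substitutes. It does not use the Textual SDK: the regex `PATTERNS`, the `SUBSTITUTES` pools, and the `synthesize` function are hypothetical stand-ins for Textual's NER models and generators, chosen only so the example runs on its own.

```python
import re

# Hypothetical stand-in for NER detection. These patterns and labels are
# illustrative assumptions, NOT Tonic Textual's models, entity types, or API.
PATTERNS = {
    "NAME": re.compile(r"\b(?:Mr|Ms|Mrs|Dr)\. [A-Z][a-z]+ [A-Z][a-z]+"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
}

# Hypothetical pools of synthetic substitutes, one per entity type.
SUBSTITUTES = {
    "NAME": ["Alan Reyes", "Dana Whitfield", "Priya Nolan"],
    "DATE": ["1970-01-15", "1985-06-30", "1992-11-02"],
}


def synthesize(text, entity_types, mapping=None):
    """Replace each detected entity with a synthetic value.

    The same original value always maps to the same substitute, so
    references to one patient stay consistent across the note.
    Returns the rewritten text and the original-to-synthetic mapping.
    """
    mapping = {} if mapping is None else mapping

    def replace(label, match):
        key = (label, match.group(0))
        if key not in mapping:
            pool = SUBSTITUTES[label]
            # Pick the next unused substitute, cycling if the pool runs out.
            used = sum(1 for seen_label, _ in mapping if seen_label == label)
            mapping[key] = pool[used % len(pool)]
        return mapping[key]

    # Only the entity types chosen for compliance are replaced.
    for label in entity_types:
        text = PATTERNS[label].sub(lambda m, label=label: replace(label, m), text)
    return text, mapping


note = "Dr. Jane Smith saw Mr. John Doe on 2023-03-04. Mr. John Doe was born 1961-09-12."
safe_note, mapping = synthesize(note, ["NAME", "DATE"])
print(safe_note)
```

Passing the returned `mapping` back in on later calls keeps substitutions consistent across multiple notes. The mapping links real values to synthetic ones, so it should be treated as sensitive and kept out of the training set.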

Ingest the dataset into the LLM for fine-tuning
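Before fine-tuning, the de-identified notes usually need to be paired with their target structured outputs in whatever format your training pipeline expects. The sketch below assumes a JSON Lines file with `prompt` and `completion` fields; those field names, the instruction text, and the helper names are assumptions for illustration, so adjust them to your fine-tuning framework. The target follows the shape of a FHIR `Patient` resource and contains only synthetic values.

```python
import json

# Assumed instruction text; adapt it to your own task definition.
INSTRUCTION = "Convert the clinical note to a FHIR-like JSON resource.\n\n"


def to_training_record(note, fhir_resource):
    """Pair one de-identified note with its target structured output."""
    return {
        "prompt": INSTRUCTION + note,
        "completion": json.dumps(fhir_resource, sort_keys=True),
    }


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(record) + "\n" for record in records)


# Synthetic example values only; no real patient data belongs here.
safe_note = "Dana Whitfield was born 1985-06-30."
target = {
    "resourceType": "Patient",
    "name": [{"family": "Whitfield", "given": ["Dana"]}],
    "birthDate": "1985-06-30",
}

jsonl_text = to_jsonl([to_training_record(safe_note, target)])
print(jsonl_text)
```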

When possible, benchmark the performance of the model using real data. While real data should not be used as training data because of memorization risk, it can be used as test data to ensure the model is performing as expected.
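One simple way to benchmark structured outputs is field-level accuracy: the share of fields in the reference output that the model reproduced exactly. The sketch below is one possible metric under that assumption, not the evaluation used in the video; `flatten` and `field_accuracy` are hypothetical helper names, and the sample values are made up.

```python
def flatten(resource, prefix=""):
    """Flatten nested dicts and lists into a {path: leaf_value} mapping."""
    if isinstance(resource, dict):
        items = resource.items()
    elif isinstance(resource, list):
        items = enumerate(resource)
    else:
        return {prefix: resource}
    flat = {}
    for key, value in items:
        path = f"{prefix}.{key}" if prefix else str(key)
        flat.update(flatten(value, path))
    return flat


def field_accuracy(predictions, references):
    """Fraction of reference fields that the predictions match exactly."""
    correct = 0
    total = 0
    for predicted, expected in zip(predictions, references):
        predicted_flat = flatten(predicted)
        for path, value in flatten(expected).items():
            total += 1
            if path in predicted_flat and predicted_flat[path] == value:
                correct += 1
    return correct / total if total else 0.0


# Made-up example: the model gets the birth date wrong.
expected = {
    "resourceType": "Patient",
    "name": [{"family": "Doe", "given": ["John"]}],
    "birthDate": "1961-09-12",
}
predicted = {
    "resourceType": "Patient",
    "name": [{"family": "Doe", "given": ["John"]}],
    "birthDate": "1961-09-21",
}
score = field_accuracy([predicted], [expected])
print(score)
```

Here three of the four reference fields match, so the score is 0.75. Comparing this score for a model trained on de-identified data against a baseline shows how much utility the synthetic substitutes preserve.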

Assuming the model performs well on the test set, the model is safe to deploy in production.

Try for yourself

Want to test it out? We’ve included all of the assets from the video in the playbook so that you can experiment on your own.

Built-in intelligence for real-world data

Tonic.ai comes ready with out-of-the-box support for a rich library of entity types—so your data is understood from day one. From names, dates, and locations to nuanced healthcare, finance, and developer-specific fields, our pre-trained models are designed to recognize the structures and semantics that matter most. Whether you're redacting sensitive information or enriching records with entity-level precision, these built-in types form the backbone of smarter, safer data workflows.

Here's a look at the entities Tonic Textual can detect automatically:
