Expert insights on synthetic data

The latest

How to maximize HEDIS scores with synthetic data

Accessing PHI for development and testing is often blocked by stringent HIPAA compliance requirements. Learn how synthetic data helps engineers build tools to close care gaps and improve HEDIS scores.

Blog posts


Data de-identification

Data privacy

Healthcare

Tonic Structural

Tonic Textual

Tonic Fabricate

Data privacy

Data de-identification

Tonic Fabricate

Tonic Structural

Tonic Textual

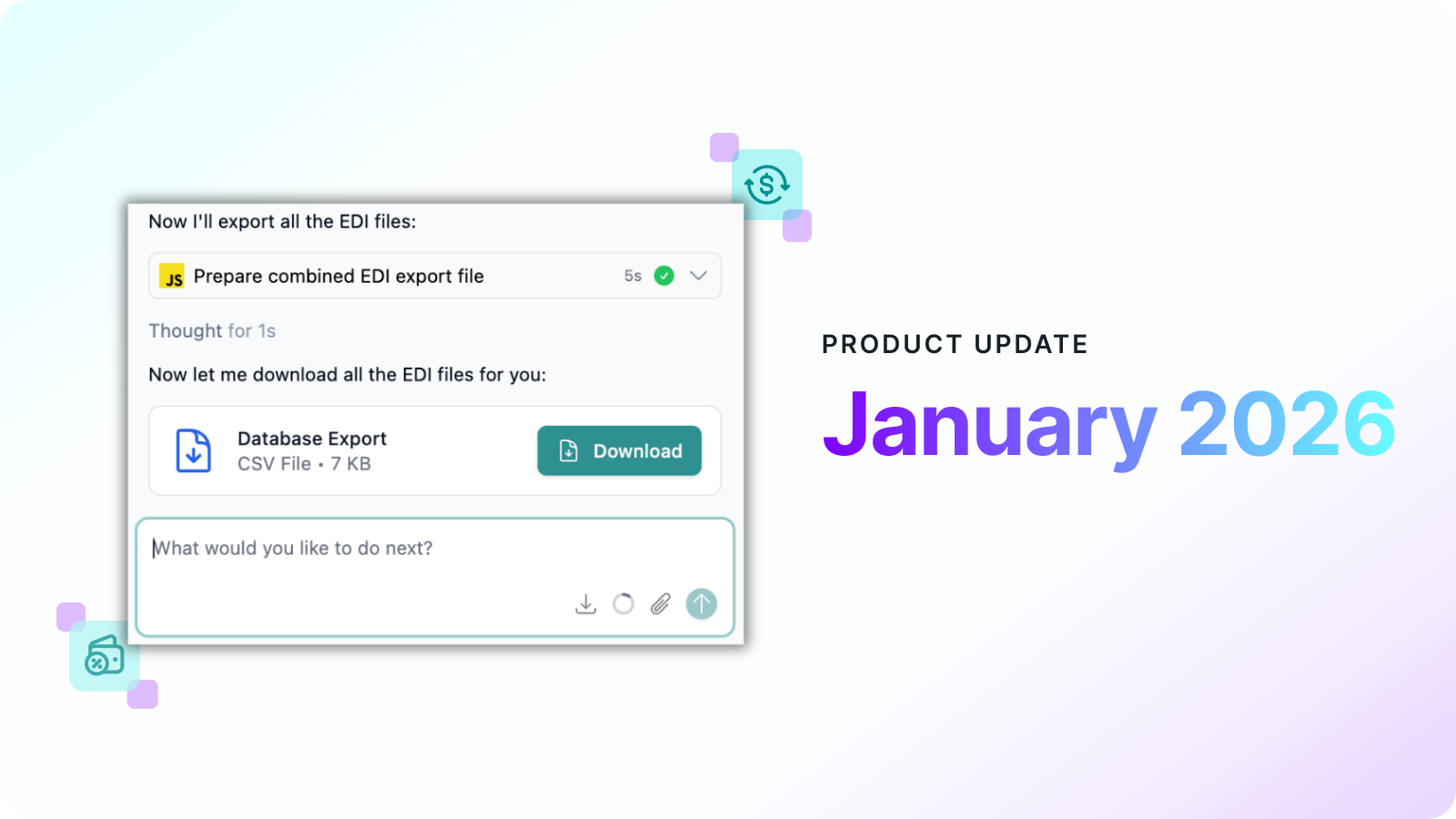

Product updates

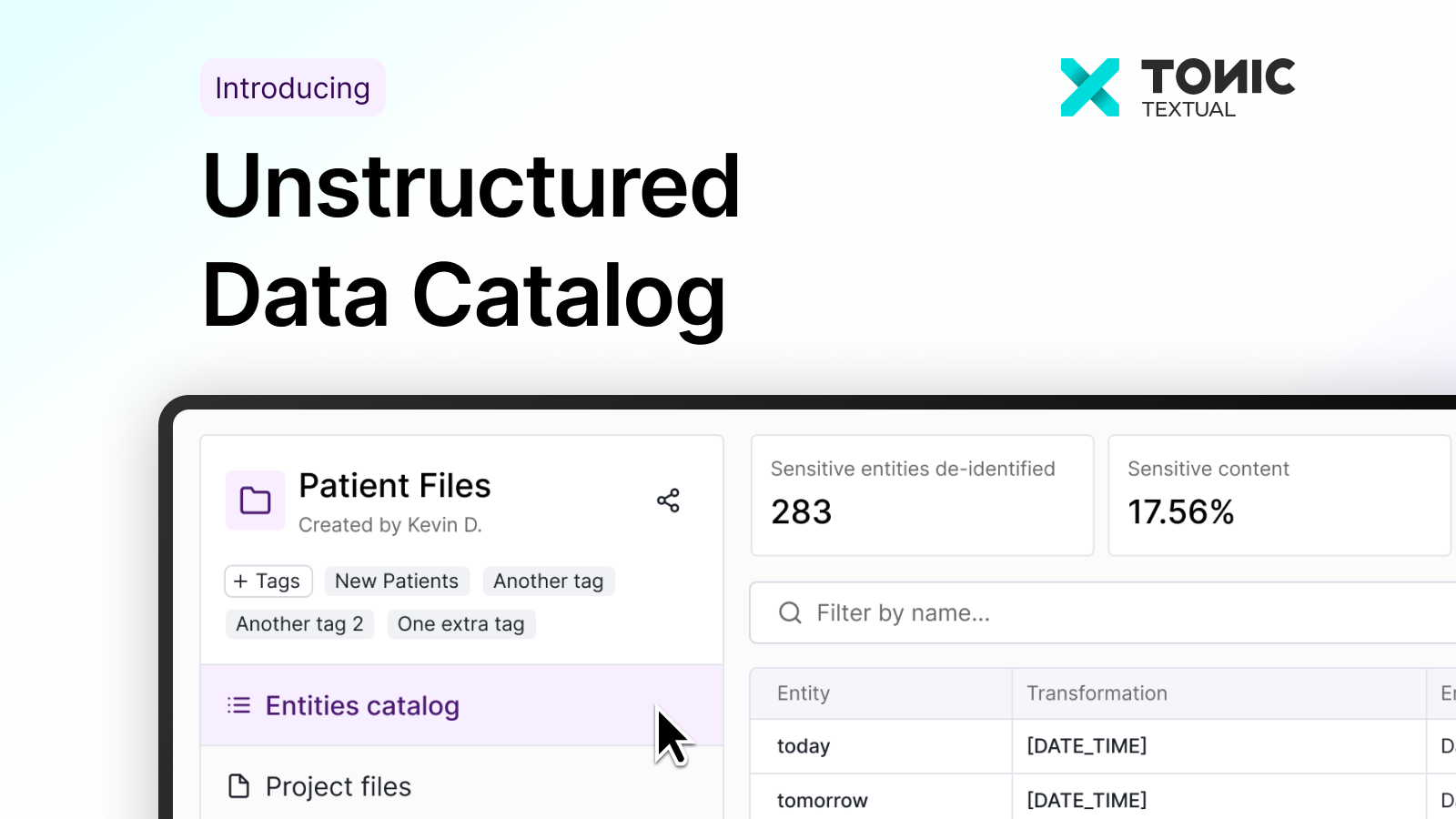

Tonic Textual

Data privacy

Data de-identification

Data synthesis

Tonic Structural

Tonic Fabricate

Tonic Textual

Data synthesis

Data privacy

Generative AI

Tonic Structural

Tonic Fabricate

Tonic Textual

Data privacy

Healthcare

Tonic.ai editorial

Tonic Textual

Product updates

Tonic.ai editorial

Tonic Fabricate

Tonic Structural

Tonic Textual

Data de-identification

Data privacy

Healthcare

Tonic Structural

Tonic Textual

Data de-identification

Test data management

Tonic Structural

Tonic Textual

Test data management

Data privacy

Tonic Structural

Tonic Textual

Tonic Fabricate
