How to generate realistic test data with Faker

New regulations around data privacy and an increasing awareness of the importance of protecting sensitive data are pushing companies to lock down access to their production data. Restricting access to high quality data with which to build and test leads to a variety of issues, including making it more difficult to find bugs. In this article we’ll look at a variety of ways to populate your dev/staging environments with high quality synthetic data that is similar to your production data.

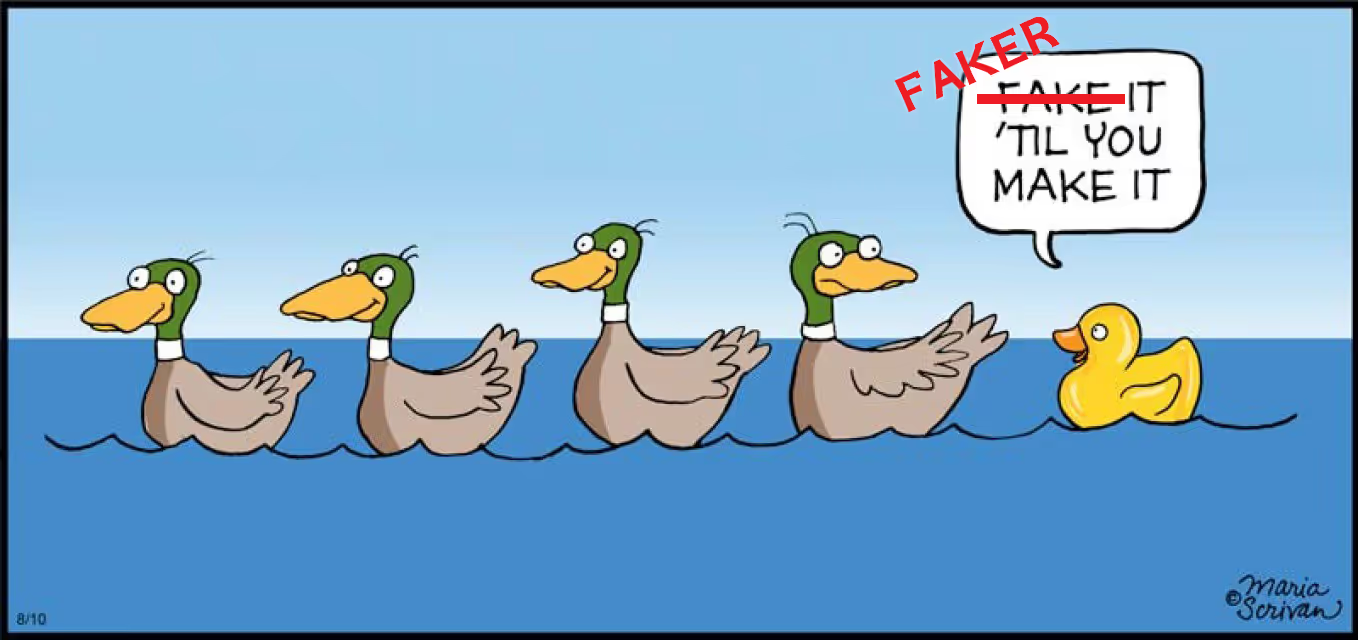

To accomplish this, we’ll use Faker, a popular python library for creating fake data.

What is Faker

Faker is a python package that generates fake data. It is available on GitHub, here. It is also available in a variety of other languages such as perl, ruby, and C#. This article, however, will focus entirely on the Python flavor of Faker.

Data source

We obviously won’t use real data in this article; we’ll use data that is already fake but we will pretend it is real. The data we will use is a table of employees at a fictitious company. Our goal will be to generate a new dataset, our synthetic dataset, that looks and feels just like the original data.

Our ‘production’ data has the following schema. As you can see, the table contains a variety of sensitive data including names, SSNs, birthdates, and salary information.

Getting started

Refer to the Faker documentation for more details on how to install Faker, but in short you can run:

Once installed, we can start masking individual columns. Normally, we’d do an analysis of the table to determine which columns are PII and which are not, but in this case, I’d like to be able to generate arbitrary amounts of data for this schema so I’ll need to create a generating function for each column. As a quick pass, let’s say we’d like to use the following faker providers on each column:

For the columns that don’t have an applicable provider, we’ll handle them ourselves leveraging python’s own random library. Note that as of this writing there is a known issue in Windows 10 that causes exceptions to occasionally be raised when using the Faker date_time provider.

Below, you can find a python snippet that contains a mapping from each column name to a python lambda function which will generate the columns’ value.

We can easily generate a new row of data by calling the following:

Or we can generate an arbitrary number of rows by throwing this call into a loop:

At this point, you could write this Faker data to a CSV and import it into the database of your choosing.

Drawbacks to what we just did

There are several drawbacks to what we just did. First, Faker was only able to help us with a little more than half of our columns. For the remainder, we had to write our own logic. That in itself is somewhat expected but certainly a negative. Second, the quality of the data we generated is poor for several reasons.

Poor data quality

Below are a few issues with our approach in the previous section that led to the poor quality of the Faker data.

- First names do not match the expected Gender. This is because we generate each column independently of the others.

- Birth Date and Start Date are generated independently of each other, which can result in a Start Date that occurs prior to a Birth Date which is not possible.

- Office, Title, Org are interrelated via a hierarchical structure. Here is some real data to show what I mean:

As you can see, certain cities only have certain Orgs and certain Orgs only have certain roles. Additionally, there are only 4 possible cities. In our approach, we generated random cities (as opposed to limiting it to 4 cities) and when we generated Title and Org we did so independently of each other and independently of city. This will result in impossible rows such as:

where we have generated a city that isn’t one of the 4 allowed cities and we’ve created an Engineer inside the Sales org which is also not possible.

- Salary and Bonus were generated independently of each other when in fact salary and bonus are strongly related. At most companies, the higher (or lower) your salary, the higher (or lower) your bonus. It would be better if we generated salary and bonus values with such a relationship.

- Salary and Bonus depend on title. In the real world, people with different titles make more or less depending on their title. For example, a VP should make more money than a Sales Associate. With our approach, we generated Salary and Bonus independently of Title, so in our test data people with different titles will all make the same, on average.

Conclusion

All of the above bullet points have several things in common. First, in any future attempt, we must take into account the relationships between columns. Currently, each column is generated independently of every other column. This isn’t feasible for generating high quality test data. Second, Faker is useful for generating some types of data but it is not useful for others.

A second attempt at generating test data

Let’s take our findings from the attempt and make a new test data set that has higher quality data. To accomplish this, we will address each of the bullet points in the previous section.

You’ll notice that some of these issues are easy to address and some are quite difficult. We will use Faker where possible.

Linking Columns

First, we must find a way to link columns together. We can do that by modifying our lambda expressions to accept arguments which it can use to better generate data.

Linking First name and Gender

Let’s create a function that returns a Name and Gender together.

This approach is much better, but what if the real data does not have equal numbers of men and women? We might want to weigh more towards Male or Female employees.

Linking Birth and Start Date

To ensure that start dates do not happen before birth dates we can link them together as such:

This will generate start dates some time in the past 20 years and ensure that birth dates are between 18 years and 40 years before that.

This will guarantee generating dates that are at least valid, however, it would be nice to show that VPs on average are at the company longer than Engineers or that the distribution of time between being born and starting at the company follows the real distribution (as opposed to the uniform distribution we’ve chosen).

Linking Office, Title, and Org

To ensure that the hierarchical structure of the data is preserved, we can define that structure in our code and generate valid triplets of (office,title,org).

This is much better. We are guaranteed to only choose logical triples, i.e. we won’t see any Engineers in the Sales organization. However, we are assuming that the distribution of employees in each office is uniform, that the distribution of VPs and Managers in the Product organization is uniform, etc. It would be better if we could look at the frequencies of each domain and create new distributions that follow those frequencies.

Linking Salary and Bonus together

Salary and Bonus have a strong positive correlation, i.e. the higher you’re paid the higher your bonus.

Let’s write some code to show that relationship in our Faker data.

This will generate salary and bonus information with a strong positive correlation. In fact, below is a scatter plot showing the relationship. The x-axis is salary and the y-axis is bonus.

Linking Title with Salary and Bonus information

Since we’ve already made new functions for both Title and Salary/Bonus, we will actually just combine them all together to make one generator that creates values for title, org, office, salary, and bonus.

Now, the range of salaries an employee can make (and hence their bonus) is dictated by their title. This is great but could be even further improved by taking into account which org you belong to and which city you live in.

Putting it all together

Let’s now tie everything together for our second attempt.

First, our new lambda assignments look like this. We’ve modified things to ensure that each lambda always returns a dictionary.

To handle this and end up with a single list per row, we could do something like:

In summary

As you can see, our second attempt generates much higher quality data by addressing each of the issues identified in our first attempt. We continue to make great use of Faker but have also written some of our own generators. Still, there’s much more that could be done!

The largest issue in our second attempt is the fact that we don’t make more use of our underlying data set. By collecting various statistics, metrics, and heuristics off of our underlying data set, we could do a lot to make our fake data look more real. It is a challenging problem, however, and can make people shy away due to the complexity and amount of statistics knowledge required.

If you’d like to see fully functioning code from either our first or our second attempt, you can find it on GitHub here and here.

And if you're looking for an off-the-shelf solution for generating realistic test data, sign up for free trials of Tonic Fabricate, our platform for generating synthetic relational databases from scratch, and Tonic Structural, our platform for de-identifying your production data for secure, realistic use in your lower environments. Once you've taken them for a spin, drop us a line to let us know what you think.

Adam Kamor, Co-founder and Head of Engineering at Tonic.ai, leads the development of synthetic data solutions that enable AI and development teams to unlock data safely, efficiently, and at scale. With a Ph.D. in Physics from Georgia Tech, Adam has dedicated his career to the intersection of data privacy, AI, and software engineering, having built developer tools, analytics platforms, and AI validation frameworks at companies such as Microsoft, Kabbage, and Tableau. He thrives on solving complex data challenges, transforming raw, unstructured enterprise data into high-quality fuel for AI & ML model training, to ultimately make life easier for developers, analysts, and AI teams.

.svg)

.svg)

.svg)