Expert insights on synthetic data

The latest

A guide to data masking for HITRUST certification

Effective data protection to meet the stringent requirements of HITRUST certification is less onerous than you think. All it takes is the right combination of data transformation solutions.

Blog posts



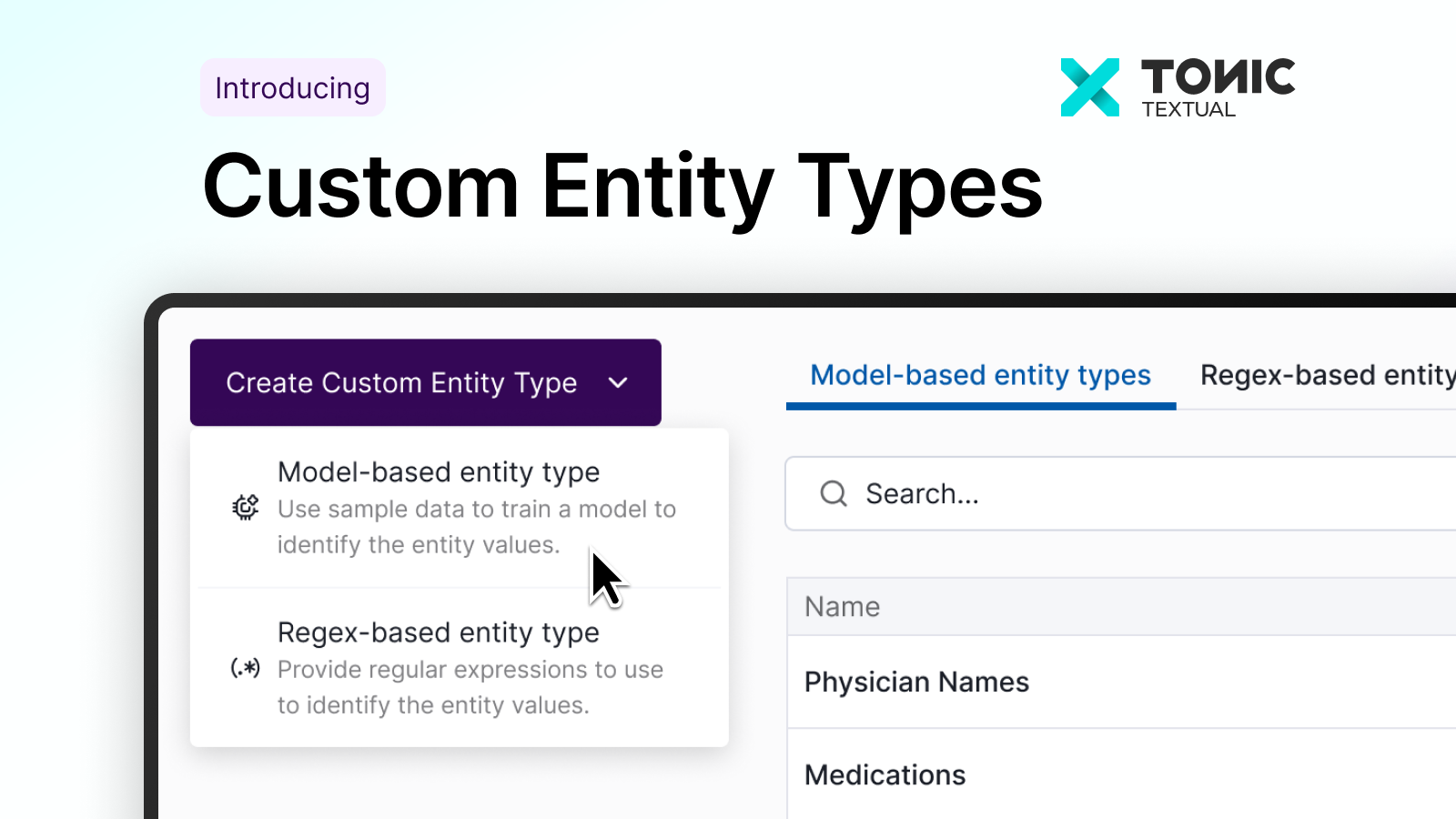

Tonic Textual

Hyper-realistic synthetic data via agentic AI has arrived. Meet the Fabricate Data Agent.

