Expert insights on synthetic data

The latest

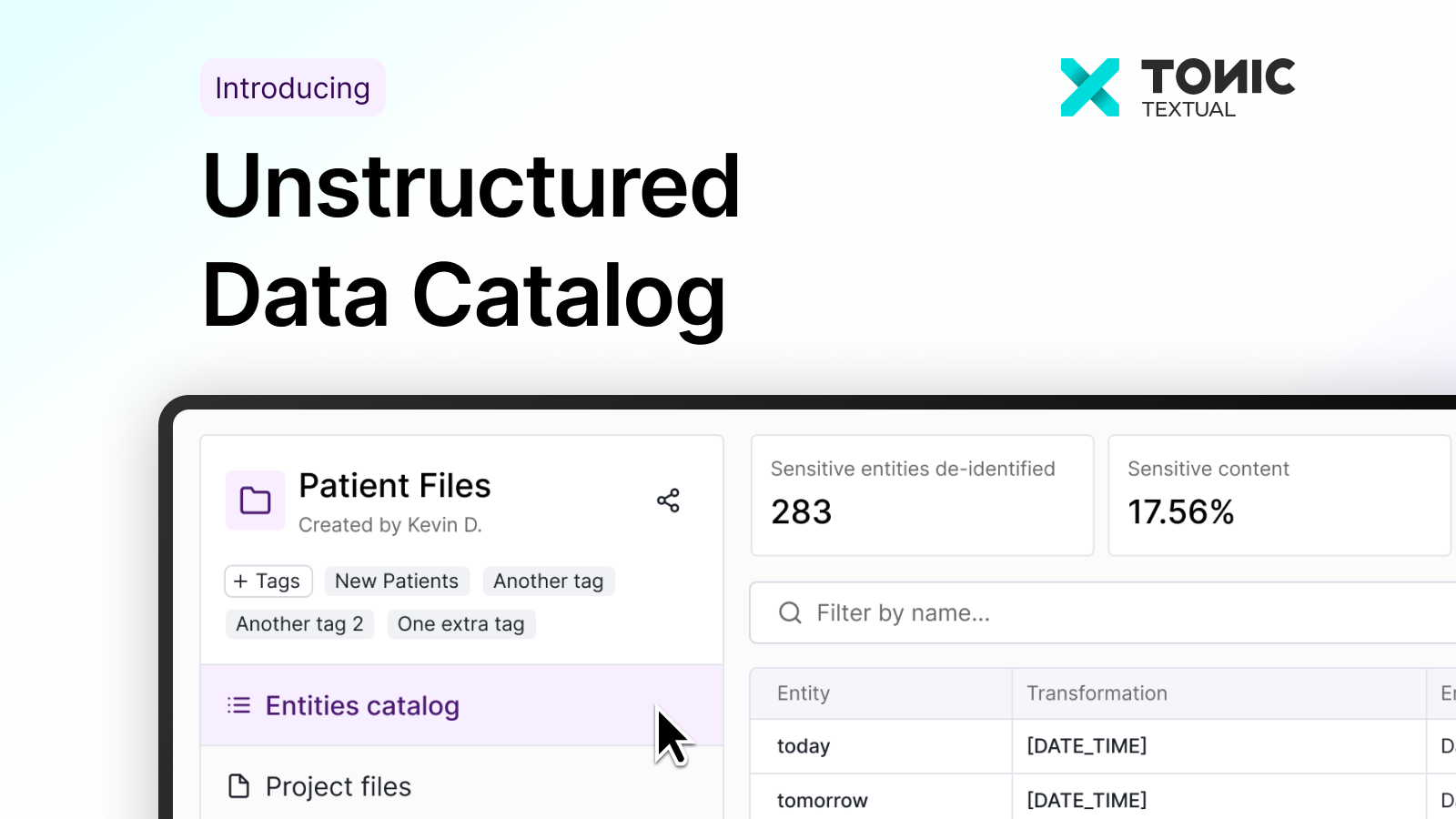

Introducing the Unstructured Data Catalog: From unknown text to usable data

The Unstructured Data Catalog gives teams visibility into sensitive text across documents so they can discover, govern, and safely use unstructured data for AI and analytics.

Blog posts

Product updates

Tonic Textual

Data privacy

Data de-identification

Data synthesis

Tonic Structural

Tonic Fabricate

Tonic Textual

Data synthesis

Data privacy

Generative AI

Tonic Structural

Tonic Fabricate

Tonic Textual

Data privacy

Healthcare

Tonic.ai editorial

Tonic Textual

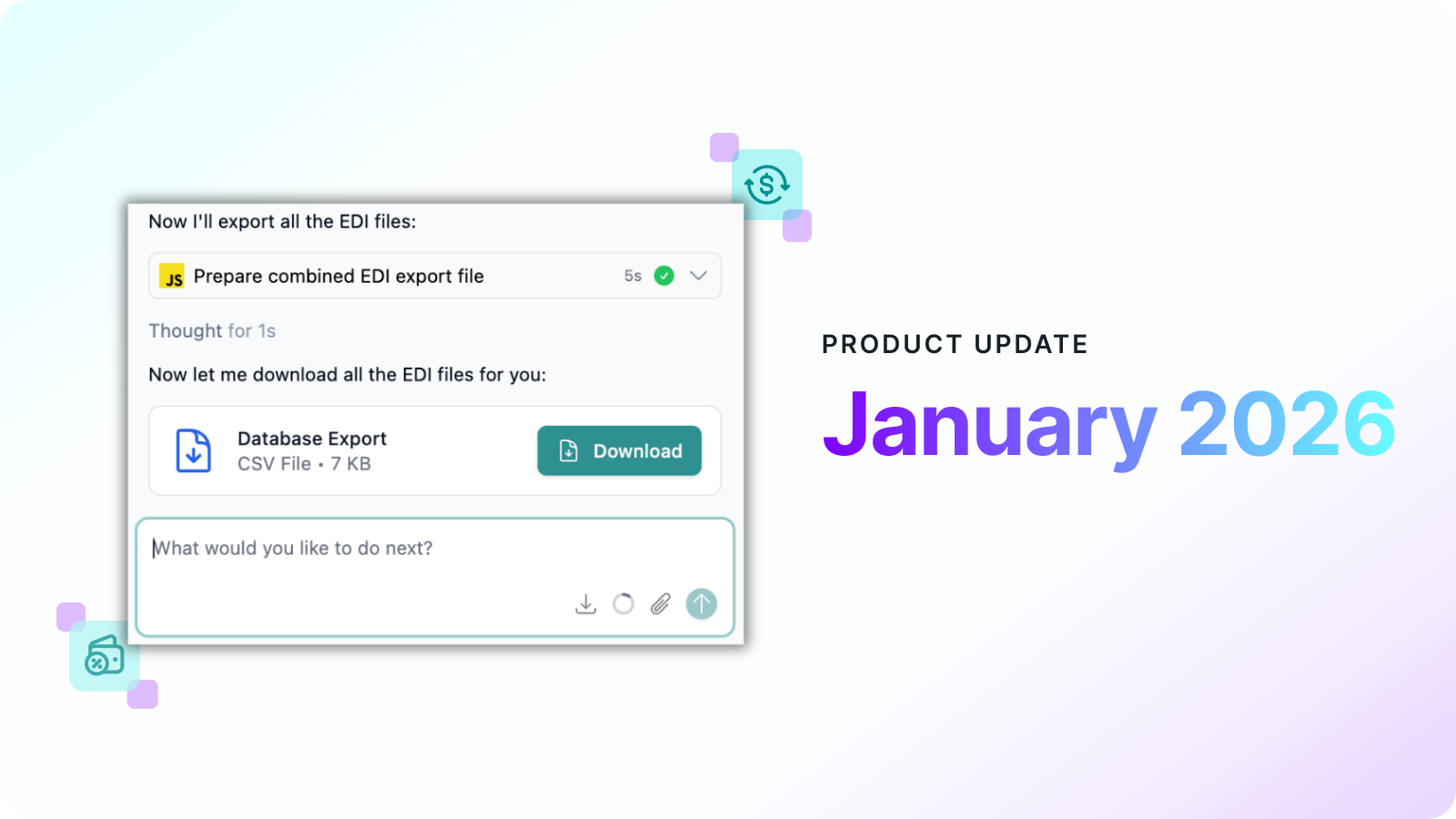

Product updates

Tonic.ai editorial

Tonic Fabricate

Tonic Structural

Tonic Textual

Data de-identification

Data privacy

Healthcare

Tonic Structural

Tonic Textual

Data de-identification

Test data management

Tonic Structural

Tonic Textual

Test data management

Data privacy

Tonic Structural

Tonic Textual

Tonic Fabricate

Data de-identification

Data privacy

Product updates

Tonic Textual
