Expert insights on synthetic data

The latest

How to mitigate the risk of a data breach in non-production environments

Non-production environments are often overlooked when it comes to data security, but they can be just as vulnerable to breaches as production systems. Learn how to keep them protected.

Blog posts

Data privacy

Data de-identification

Tonic Fabricate

Tonic Structural

Tonic Textual

Product updates

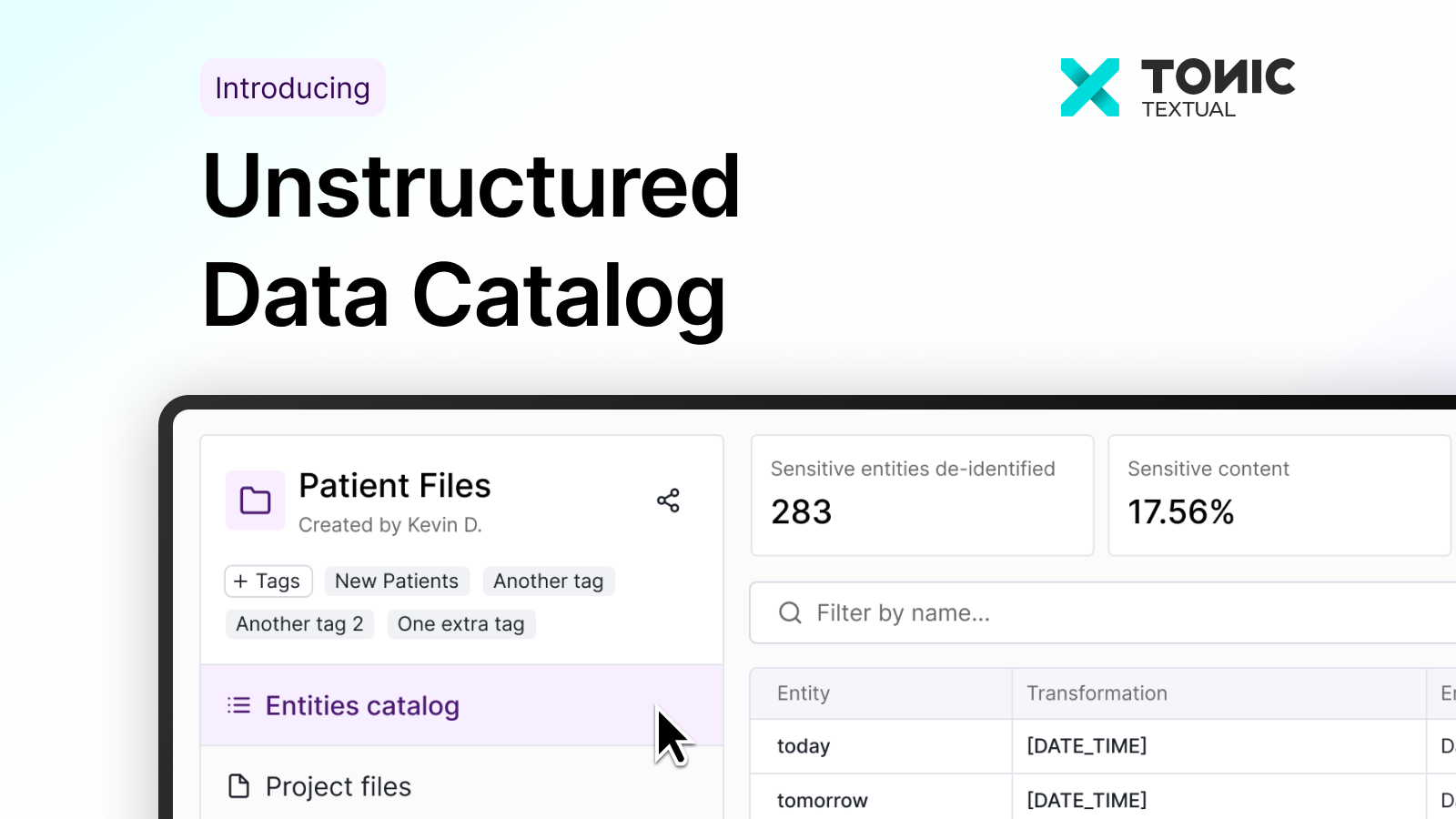

Tonic Textual

Data privacy

Data de-identification

Data synthesis

Tonic Structural

Tonic Fabricate

Tonic Textual

Data synthesis

Data privacy

Generative AI

Tonic Structural

Tonic Fabricate

Tonic Textual

Data privacy

Healthcare

Tonic.ai editorial

Tonic Textual

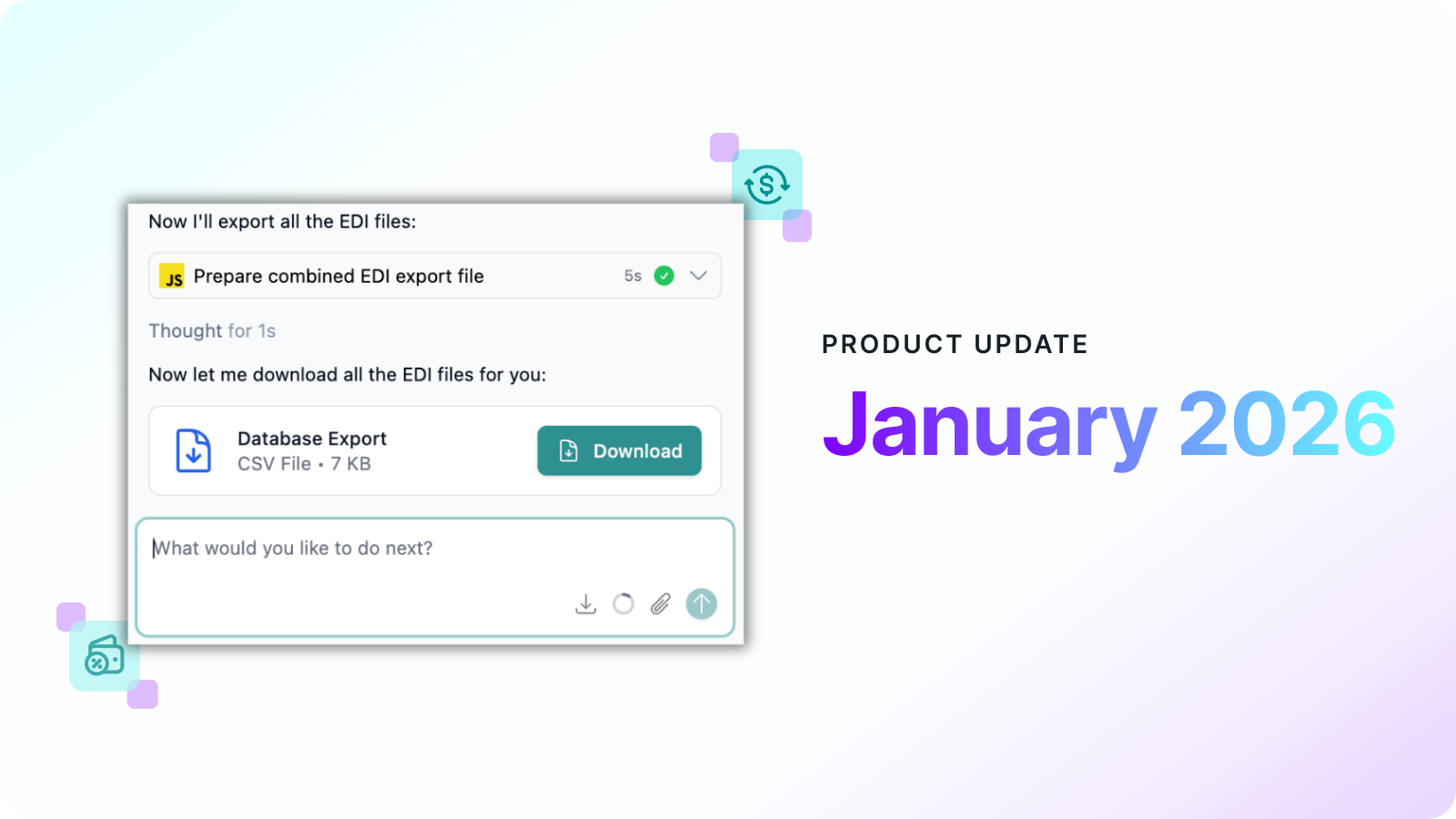

Product updates

Tonic.ai editorial

Tonic Fabricate

Tonic Structural

Tonic Textual

Data de-identification

Data privacy

Healthcare

Tonic Structural

Tonic Textual

Data de-identification

Test data management

Tonic Structural

Tonic Textual

Test data management

Data privacy

Tonic Structural

Tonic Textual

Tonic Fabricate
