Meet Tonic Datasets: Bespoke synthetic datasets for AI training and evaluation

If you’re building AI today, you already know the hard truth: models are only as good as the data you feed them. But the data you really want—rich, domain‑specific information lives behind company firewalls, simply doesn’t exist, or is expensive and cumbersome to acquire. How do you train a medical language model without patient records? How do you test an agent that needs to remember long conversations?

We’re launching Tonic Datasets because AI’s hunger for quality data is outpacing the availability of real world data. Public datasets are tapped out, and human generated data is slow and expensive. Tonic.ai has already helped enterprises access their enterprise data for technical workflows with Tonic Structural and Tonic Textual, which de‑identify and synthesize sensitive data, and Tonic Fabricate, which generates data from scratch. Now we’re opening that expertise to everyone: we can take a handful of seed records, a database schema, or even just a description of the dataset you need and build a high‑fidelity synthetic dataset for training, evaluating model performance, and domain specialization.

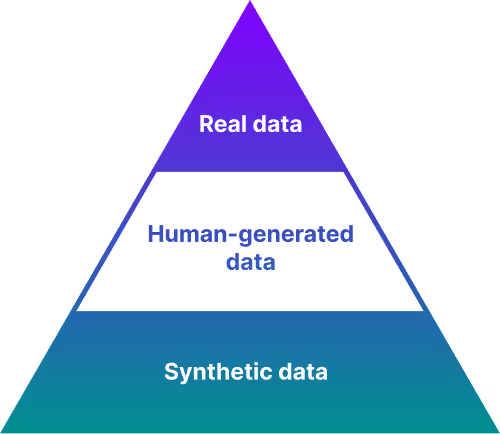

A layered data landscape

To understand why Tonic.ai stands apart, it helps to look at the data landscape as a pyramid. At the very top is real‑world data which is finite, expensive, and increasingly difficult to source. Most of human knowledge has already been consumed by LLMs, which makes enterprise data increasingly more desirable. However, there is only a limited amount of genuine production data that exists, and it comes with strict privacy and compliance constraints that make acquiring and using it challenging.

Beneath that layer sits human‑generated or human‑annotated data. This is where services‑focused firms like Handshake AI, Scale AI, and Turing operate. They use large teams to label and curate datasets, extending the supply of usable data but still at a high cost and with limited scalability.

Tonic.ai leads the market in synthetic data, the most scalable and flexible category. Our approach starts with our advanced technologies and expertise in data synthesis and synthetic research; nearly 20% of our R&D team holds PhDs in this field. We then add a services layer to ensure custom datasets meet specific use cases. This foundation enables us to rapidly generate high-fidelity synthetic data at a lower cost and at a scale unmatched by human-driven services alone.

Crucially, Tonic.ai can also partner with services‑based companies. Providers that specialise in human annotation can take data generation part of the way, and our synthetic data platform and tooling can complete the loop, scaling up volume, improving consistency, and ensuring privacy compliance. In doing so, Tonic.ai bridges the gap between finite real data, labor‑intensive human‑generated data, and infinitely scalable synthetic data.

What makes Tonic Datasets different

- Built on privacy‑first DNA. We aren’t scraping the web or hiring armies of labelers. We use the same synthesis engines trusted by our hundreds of healthcare, finance, and software customers to protect their most sensitive data, as well as new capabilities for best-in-class data generation.

- Flexible inputs. You can start with a schema, a small seed, or a full data spec, and we’ll generate the rest. Our models are tuned for specific industries and can produce structured records or free‑form text.

- Human‑in‑the‑loop. When the use case demands it, domain experts refine and validate the outputs to ensure the synthetic data feels authentic and fits the task.

- Ready to use. Need a place to run your tests? Ephemeral environments spin up isolated, fully hydrated databases with your synthetic data so you can plug them right into training pipelines or QA workflows.

- Privacy and compliance built in. Because the data is synthetic and de‑identified, you can share it freely without running afoul of HIPAA, GDPR, or other regulations.

Where you can use it

Think of Tonic Datasets as your on‑call data lab. We’re already working with AI research groups at Fortune 500 companies who are looking for bespoke datasets for training and evaluating AI systems. Here are a few common use cases:

- Training and fine‑tuning on data that doesn’t exist in the public domain like millions of insurance claims for an underwriting model, or realistic legal briefs to teach a contract analysis tool.

- Benchmarking and stress‑testing models with controlled scenarios, such as generating thousands of rare medical procedures or synthetic social‑network chats to evaluate an agent’s memory and safety.

- Specializing AI systems with vertical data, whether that’s doctor‑patient dialogues, loan applications, retail orders, or any other industry‑specific content.

- Creating new revenue streams by transforming your proprietary data into synthetic datasets you can license without ever revealing the originals.

How it comes together

Working with Tonic Datasets is straightforward. We start with a discovery call to understand your goals. Together, we scope the dataset’s structure and success criteria. We produce a small sample so you can verify quality and structure, then build the full set. If you need a data environment to test with, we’ll spin one up. The result is high‑fidelity synthetic data delivered quickly and safely, saving you months of data collection and compliance headaches.

Where we’re going next: MCP for Synthetic Data

We’re not standing still. Behind the scenes, we continue to build out the underlying toolsets and infrastructure that make our data generation possible. One of the most exciting projects in our R&D pipeline is currently in Alpha testing with select design partners: an MCP (Model Context Protocol) server for synthetic data generation. This reflects a broader market shift toward conversational, LLM‑driven workflows for technical tasks.

Instead of navigating bespoke applications, users are increasingly opting for chat experiences (i.e. Replit and Lovable) to handle complex or technical tasks such as coding. Our vision for the Tonic MCP server extends beyond code generation and into data generation where a user will be able to describe the data they need in natural language. An LLM will act as the orchestrator, asking clarifying questions, generating and executing code in a sandbox, storing the dataset, visualizing it, and iteratively refining it.

This new server will expose our synthetic data capabilities through a chat interface, combining declarative descriptions with imperative code when needed. The potential benefits are enormous: it could open synthetic data generation to a much wider audience, bridging the user’s domain expertise with Tonic.ai’s data generation expertise. It could become an entry‑level “Tonic for everyone” product and will evolve into a control plane for all of our offerings.

Our vision is to make high‑fidelity, privacy‑first data available to anyone building intelligent systems. Tonic Datasets and the forthcoming Tonic MCP server are key steps toward that future.

If you’re curious about what synthetic data could unlock for your models, we’d love to show you. If you’re interested in trying out the Alpha version of Tonic MCP server for synthetic data generation, we’d love to hear from you.

And as always, feel free to connect with our team to see sample datasets and tell us what you’re building. Whether you’re looking to develop smarter models, test agentic systems, or monetize your own data safely, Tonic.ai is here to help you move faster, safer, and farther than ever before.

.svg)

.svg)

.svg)