How to sanitize production data for use in testing

Production data reveals the edge cases your unit tests miss: the rare value combinations, the unexpected nulls, the referential integrity patterns that only surface at scale. But copying raw production data into testing environments exposes real customer information and can violate privacy regulations such as GDPR, HIPAA, and CCPA.

Data sanitization for software testing means transforming production data so your QA and development teams can run realistic tests without accessing real personal information. Proper sanitization preserves the statistical shape and relationships that make tests meaningful—same format constraints, same foreign key integrity, same distribution of edge cases—while removing identifiable details that create compliance risk.

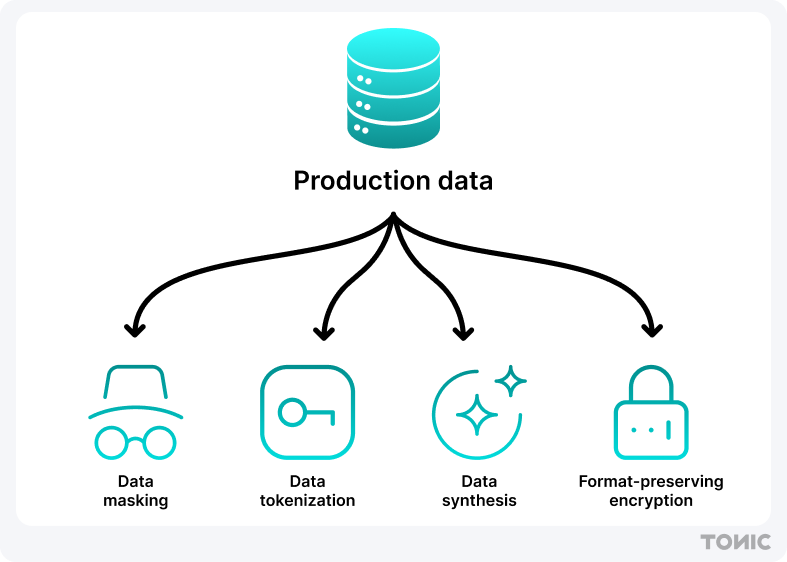

In this guide, you'll explore four core sanitization techniques: masking, tokenization, synthesis, and format-preserving encryption. You'll see when to use each method in testing workflows and how Tonic.ai’s platform supports data sanitization for software testing while maintaining test data quality.

Why data sanitization for software testing matters

Every time you lift snapshots of production data into a test or staging environment, you risk leaking personal information and potentially violating regulations. Data sanitization for software testing lets you retain realistic scenarios while keeping real identifiers out of your QA and development workflows.

Without data sanitization, you run the risk that:

- Developers accidentally commit datasets that contain PII to public repositories.

- Test environments leak through misconfigured access controls, exposing patient records or financial data.

- Models trained on unsanitized data memorize and regurgitate sensitive information.

GDPR

The General Data Protection Regulation (GDPR) requires organizations to implement “appropriate technical and organisational measures” to protect personal data. In practice, that includes removing or pseudonymizing direct identifiers before using production data in non-production environments.

CCPA/CPRA

The California Consumer Privacy Act (CCPA) and its amendment, the California Privacy Rights Act (CPRA), grant consumers rights over their personal information. If your test data includes California residents’ records, remove or transform identifying fields to avoid unauthorized access or disclosure.

HIPAA

Under the Health Insurance Portability and Accountability Act (HIPAA), covered entities can de-identify Protected Health Information (PHI) in one of two ways: by removing the 18 identifier types listed in the Safe Harbor method, or by obtaining an expert’s determination that the risk of re-identification is very small. Sanitizing patient data before testing helps ensure you won’t inadvertently expose PHI.

ISO 27040

ISO 27040 provides guidance on storage security, including media sanitization techniques like cryptographic erase. When you’re snapshotting databases to test environments, you should apply equivalent measures at the data level—such as format-preserving encryption (FPE)—so test workloads can’t recover original values.

ISO 27701

As an extension to ISO 27001, ISO 27701 focuses on privacy information management. It recommends pseudonymization as a control for data protection. Tokenization or masked substitutions in your test sets map closely to pseudonymization practices outlined in this standard.

Data sanitization techniques

The sanitization technique you choose directly impacts test coverage and release confidence. Redact too aggressively, and your test suite passes with incomplete data that fails in production. Mask inconsistently across related tables, and your integration tests can't reproduce the foreign key relationships that exist in live systems. Generate purely synthetic data without preserving production's statistical shape, and you miss the edge cases—the rare value combinations, the unexpected nulls, the outlier distributions—that cause real bugs.

Your test environments need data that behaves like production: same format constraints, same referential integrity, same edge-case frequency. But they also need ironclad privacy protection that passes GDPR, HIPAA, and internal security reviews. The four techniques below each solve different pieces of this puzzle, and most testing workflows require a combination—masking for realistic values, tokenization-style transformation for consistent IDs across tables, synthesis to supplement sparse production data, and format-preserving encryption for fields with strict validation rules.

Here's when to reach for data masking, tokenization, synthesis, or format-preserving encryption, and how to use the Tonic product suite to support each approach.

Data masking

Data masking replaces real values with fictitious but realistic placeholders. You maintain schema and format—dates stay dates, credit cards still look like credit cards—so your tests run unmodified while privacy is preserved.

Tonic Structural masks structured and semi-structured data by replacing sensitive values like names, SSNs, or account numbers with realistic substitutes. For unstructured data (customer comments, support tickets), Tonic Textual detects entities—names, emails, IDs—and replaces them with synthetic values.
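To make the idea concrete, here is a minimal Python sketch of deterministic, format-preserving masking. It is not how Tonic Structural works internally; the key, the name lists, and the helper names (`mask_name`, `mask_digits`) are all hypothetical, chosen only to show that a masked value can keep its shape while the same input always maps to the same substitute.

```python
import hashlib
import hmac

# Hypothetical key for illustration; in practice load it from a secret store.
MASKING_KEY = b"test-env-masking-key"
FIRST_NAMES = ["Alex", "Jordan", "Sam", "Taylor", "Morgan", "Riley"]
LAST_NAMES = ["Reed", "Hayes", "Brooks", "Lane", "Ellis", "Shaw"]


def _digest(value: str) -> bytes:
    """Keyed hash, so the same input always yields the same substitute."""
    return hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).digest()


def mask_name(real_name: str) -> str:
    """Swap a real name for a fictitious one picked from small lookup lists."""
    d = _digest(real_name)
    first = FIRST_NAMES[d[0] % len(FIRST_NAMES)]
    last = LAST_NAMES[d[1] % len(LAST_NAMES)]
    return f"{first} {last}"


def mask_digits(value: str) -> str:
    """Replace every digit but keep separators, so NNN-NN-NNNN keeps its shape."""
    d = _digest(value)
    out = []
    used = 0
    for ch in value:
        if ch.isdigit():
            out.append(str(d[used % len(d)] % 10))
            used += 1
        else:
            out.append(ch)
    return "".join(out)


masked_name = mask_name("Jane Doe")
masked_ssn = mask_digits("123-45-6789")
```

Because the substitutes are derived from a keyed hash rather than drawn at random, a value that appears in several tables is masked the same way everywhere, which keeps joins intact. A lookup list this small will produce collisions, so treat it as an illustration rather than a production approach.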

Data tokenization

Data tokenization sanitizes production data by identifying sensitive elements (like PII and PHI) and replacing them with unique, non-sensitive surrogate tokens. The original values cannot be recovered from the tokens alone, only by someone with access to the token mapping or key, if one is retained. Because each value maps to the same token wherever it appears, the dataset keeps its referential integrity and much of its statistical structure, which is vital for realistic software testing and high-quality AI model training.

Tonic Textual specializes in sanitizing unstructured data by using proprietary Named Entity Recognition (NER) models to automatically identify sensitive entities, treating them as tokens to be secured. It then replaces these sensitive entities with consistent, redacted values (e.g. NAME_395u0) or realistic synthetic values, effectively preserving the data's utility and context for AI applications like LLM fine-tuning while maintaining privacy.
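The consistency property is the part that matters most for integration tests, and it can be sketched in a few lines of Python. This is an assumption-laden illustration, not Tonic Textual's implementation: the `tokenize` helper, the key, and the sample tables are hypothetical, and the token format only loosely imitates the `NAME_395u0` style mentioned above.

```python
import hashlib
import hmac

# Hypothetical key for illustration; keep real keys out of source control.
TOKEN_KEY = b"hypothetical-tokenization-key"


def tokenize(entity_type: str, value: str) -> str:
    """Map a sensitive value to a stable surrogate such as EMAIL_1a2b3c4d5e."""
    message = f"{entity_type}:{value}".encode("utf-8")
    mac = hmac.new(TOKEN_KEY, message, hashlib.sha256)
    return f"{entity_type}_{mac.hexdigest()[:10]}"


customers = [
    {"email": "ana@example.com", "plan": "pro"},
    {"email": "bo@example.com", "plan": "free"},
]
orders = [
    {"email": "ana@example.com", "total": 40},
    {"email": "ana@example.com", "total": 15},
    {"email": "bo@example.com", "total": 7},
]

# Tokenize the join key in both tables with the same function and key.
safe_customers = [{**c, "email": tokenize("EMAIL", c["email"])} for c in customers]
safe_orders = [{**o, "email": tokenize("EMAIL", o["email"])} for o in orders]

# Every order should still point at a customer after tokenization.
known_tokens = {c["email"] for c in safe_customers}
orphans = [o for o in safe_orders if o["email"] not in known_tokens]
```

A keyed hash is used here instead of a plain hash so that someone who guesses an email address cannot confirm it by hashing it themselves. Truncating the digest to ten hex characters keeps tokens short but raises the chance of collisions on large datasets.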

Data synthesis

Synthetic data generation creates new records that mimic production schema, distributions, and correlations without directly referencing any real entries. This fully removes production records from your test set.

Tonic Fabricate generates structured datasets from scratch based on your schema, retains foreign-key relationships, and produces as many rows as you need. Validate utility by comparing summary statistics (means, variances, correlation matrices) against the original.
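One way to run that comparison is sketched below using only the Python standard library. The function names, the sample rows, and the choice of absolute gaps are assumptions made for illustration; a real check would use your own columns and tolerances.

```python
import math
import statistics


def column(rows, name):
    """Pull one numeric column out of a list of row dictionaries."""
    return [row[name] for row in rows]


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def utility_report(original, synthetic, columns):
    """Absolute gap in mean and sample standard deviation for each column."""
    report = {}
    for name in columns:
        o, s = column(original, name), column(synthetic, name)
        report[name] = {
            "mean_gap": abs(statistics.fmean(o) - statistics.fmean(s)),
            "stdev_gap": abs(statistics.stdev(o) - statistics.stdev(s)),
        }
    return report


def correlation_gap(original, synthetic, col_a, col_b):
    """How far the synthetic correlation drifts from the original one."""
    return abs(
        pearson(column(original, col_a), column(original, col_b))
        - pearson(column(synthetic, col_a), column(synthetic, col_b))
    )


# Tiny made-up samples; real checks would run on full tables.
original = [
    {"age": 20, "spend": 100}, {"age": 30, "spend": 150},
    {"age": 40, "spend": 200}, {"age": 50, "spend": 250},
]
synthetic = [
    {"age": 22, "spend": 110}, {"age": 28, "spend": 140},
    {"age": 41, "spend": 205}, {"age": 49, "spend": 245},
]

report = utility_report(original, synthetic, ["age", "spend"])
corr_gap = correlation_gap(original, synthetic, "age", "spend")
```

Small gaps suggest the synthetic set preserves the shape your tests depend on. Matching means and variances says nothing about privacy on its own, so pair a check like this with a separate leak audit.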

Format-preserving encryption

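Format-preserving encryption (FPE) encrypts values so that the ciphertext keeps the format of the original: a 16-digit credit card number encrypts to another 16-digit string, typically using an AES-based mode such as NIST's FF1. Tests that validate formatting or length don’t break, yet real values remain unrecoverable without the keys. Note that the output preserves length and character set, not checksums, so a field validated with a Luhn check may need extra handling.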

Implement FPE via Tonic Structural for fields such as account numbers or credit cards. Keep your encryption keys in secure vaults and rotate them according to your key management policies. After encryption, confirm that data integrity checks—indexes, constraints, length checks—still pass in your test schema.
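The mechanism behind FPE can be illustrated with a toy Feistel network over decimal digits. To be clear about the assumptions: this sketch is not FF1 or FF3-1, it is not what Tonic Structural uses, it substitutes HMAC-SHA256 for the AES-based round function of the standardized modes, and it should not be used to protect real data. It only shows why the output keeps the same length and alphabet and why the key is needed to reverse it.

```python
import hashlib
import hmac

ROUNDS = 10  # an even count, so both halves end at their original lengths


def _round_value(key: bytes, round_no: int, half: str) -> int:
    """Keyed pseudorandom number derived from the round and one half."""
    message = f"{round_no}:{len(half)}:{half}".encode("ascii")
    return int.from_bytes(hmac.new(key, message, hashlib.sha256).digest(), "big")


def _check(digits: str) -> None:
    if not (digits.isascii() and digits.isdigit() and len(digits) >= 2):
        raise ValueError("expected a string of at least two decimal digits")


def encrypt_digits(key: bytes, digits: str) -> str:
    """Encrypt a digit string into another digit string of the same length."""
    _check(digits)
    u = len(digits) // 2
    v = len(digits) - u
    a, b = digits[:u], digits[u:]
    for i in range(ROUNDS):
        m = u if i % 2 == 0 else v  # length of the half being replaced
        c = (int(a) + _round_value(key, i, b)) % (10 ** m)
        a, b = b, str(c).zfill(m)
    return a + b


def decrypt_digits(key: bytes, digits: str) -> str:
    """Undo encrypt_digits by running the rounds in reverse."""
    _check(digits)
    u = len(digits) // 2
    v = len(digits) - u
    a, b = digits[:u], digits[u:]
    for i in reversed(range(ROUNDS)):
        m = u if i % 2 == 0 else v
        c = (int(b) - _round_value(key, i, a)) % (10 ** m)
        a, b = str(c).zfill(m), a
    return a + b


demo_key = b"hypothetical-demo-key"
ciphertext = encrypt_digits(demo_key, "4111111111111111")
recovered = decrypt_digits(demo_key, ciphertext)
```

The ciphertext is still sixteen digits, so length and numeric constraints in the test schema continue to pass, and decrypting with the same key returns the original value. For anything beyond a demonstration, rely on a vetted implementation of a standardized mode.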

Sanitize your production data with Tonic.ai

You’ve seen why data sanitization for software testing matters under GDPR, CCPA/CPRA, HIPAA, and ISO standards and how four core techniques fit into developer workflows. Tonic.ai offers a unified platform to apply these methods at scale, whether you’re masking structured tables with Tonic Structural, redacting unstructured text via Tonic Textual, or generating synthetic sets with Tonic Fabricate.

By integrating sanitization into your CI/CD pipelines and adding automated validation checks—distribution comparisons, nearest-neighbor audits, schema integrity tests—you’ll reduce privacy risk and keep your test environments realistic.
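As a rough idea of what such automated checks might look like in a pipeline, the Python sketch below covers three of them: a leak audit, a null-rate comparison, and a column check. The function names and sample rows are hypothetical, and the sanitized sample deliberately contains one leaked email so the audit has something to find.

```python
def find_leaks(original, sanitized, sensitive_columns):
    """Return (column, value) pairs where a real value survived sanitization."""
    leaks = []
    for col in sensitive_columns:
        real_values = {row[col] for row in original if row[col] is not None}
        leaks.extend(
            (col, row[col]) for row in sanitized if row[col] in real_values
        )
    return leaks


def null_rate(rows, col):
    """Share of rows where the column is null, a simple edge-case signal."""
    return sum(1 for row in rows if row[col] is None) / len(rows)


def same_columns(original, sanitized):
    """Check that sanitization did not add or drop any columns."""
    return all(o.keys() == s.keys() for o, s in zip(original, sanitized))


# Made-up rows; the second sanitized email was intentionally left unmasked.
original_rows = [
    {"id": 1, "email": "ana@example.com", "phone": None},
    {"id": 2, "email": "bo@example.com", "phone": "555-0100"},
]
sanitized_rows = [
    {"id": 1, "email": "EMAIL_a1b2c3", "phone": None},
    {"id": 2, "email": "bo@example.com", "phone": "555-0199"},
]

leaks = find_leaks(original_rows, sanitized_rows, ["email", "phone"])
phone_null_rates = (
    null_rate(original_rows, "phone"),
    null_rate(sanitized_rows, "phone"),
)
columns_match = same_columns(original_rows, sanitized_rows)
```

In a CI job you would fail the build when `leaks` is non-empty or when null rates drift beyond a tolerance you choose. An exact-match audit like this catches only verbatim leaks, so it complements rather than replaces the distribution and nearest-neighbor checks mentioned above.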

Ready to see it in action? Connect with our team and start protecting your production data today.

Chiara Colombi is the Director of Product Marketing at Tonic.ai. As one of the company's earliest employees, she has led its content strategy since day one, overseeing the development of all product-related content and virtual events. With two decades of experience in corporate communications, Chiara's career has consistently focused on content creation and product messaging. Fluent in multiple languages, she brings a global perspective to her work and specializes in translating complex technical concepts into clear and accessible information for her audience. Beyond her role at Tonic.ai, she is a published author of several children's books which have been recognized on Amazon Editors’ “Best of the Year” lists.
