When tackling test data management, developers often wonder whether data masking or synthetic data generation is the right approach for securing data. But this isn't an either/or question—the reality involves more nuance than a simple comparison suggests.

Both data synthesis and masking are broad terms encompassing various techniques. Synthesis can refer to generating data from scratch based on schemas or rules, but it can also involve creating data from existing datasets using statistical methods, which overlaps significantly with certain masking approaches. Some masking techniques are, in fact, forms of synthesis.

Rather than viewing data synthesis vs data masking as competing approaches, developers benefit most from understanding when to use each technique and how they work together to protect sensitive information while maintaining data utility.

What is synthetic data?

Synthetic test data is artificially generated information designed to reflect the structure and patterns of real-world datasets without containing actual sensitive information.

You can create synthetic test data through a few primary approaches:

- Rule-based data generation, using predefined schemas and rules

- Model-based data generation, using statistical methods or AI

- Transformative data generation, applying techniques to existing data to transform it into new or altered synthetic values

This flexibility and variety of available approaches makes synthetic data particularly valuable when production data is unavailable, poses privacy risks, or lacks sufficient volume for comprehensive testing.

Benefits:

- Protects sensitive information by avoiding the use of real PII

- Enables development when real data doesn’t yet exist

- Supports edge case and rare scenario testing

What is test data masking?

Test data masking falls under the umbrella of transformative data generation as it transforms existing production data by replacing, encrypting, or obscuring sensitive values while preserving the data's structure and relationships.

Common masking techniques include redaction, tokenization, and substitution. Masked data maintains the logical relationships and referential integrity of your original datasets, making it ideal for testing scenarios that require realistic data patterns and database constraints.

Benefits:

- Maintains structural fidelity across datasets

- Meets regulatory standards for anonymized data

- Works well with legacy databases and compliance-heavy environments

Synthetic data vs data masking: differences

The distinction between data synthesis vs data masking isn't always clear-cut, which creates real-world confusion when selecting tools and designing data pipelines. The key is recognizing that your choice often depends on whether you need to closely mirror an existing database’s relationships and underlying business logic (favor masking), unlimited scalability (favor synthesis from scratch), or statistical realism without direct data lineage (favor synthesis from existing data)—or a combination of the three.

Here's how different approaches compare:

The middle category—data synthesis from existing data—is where synthesis and masking overlap most significantly. Techniques like differential privacy, k-anonymization, and statistical data modeling blur the traditional boundaries between these approaches.

Accelerate product innovation and AI model training with compliant, realistic test data.

Use cases for data synthesis vs data masking

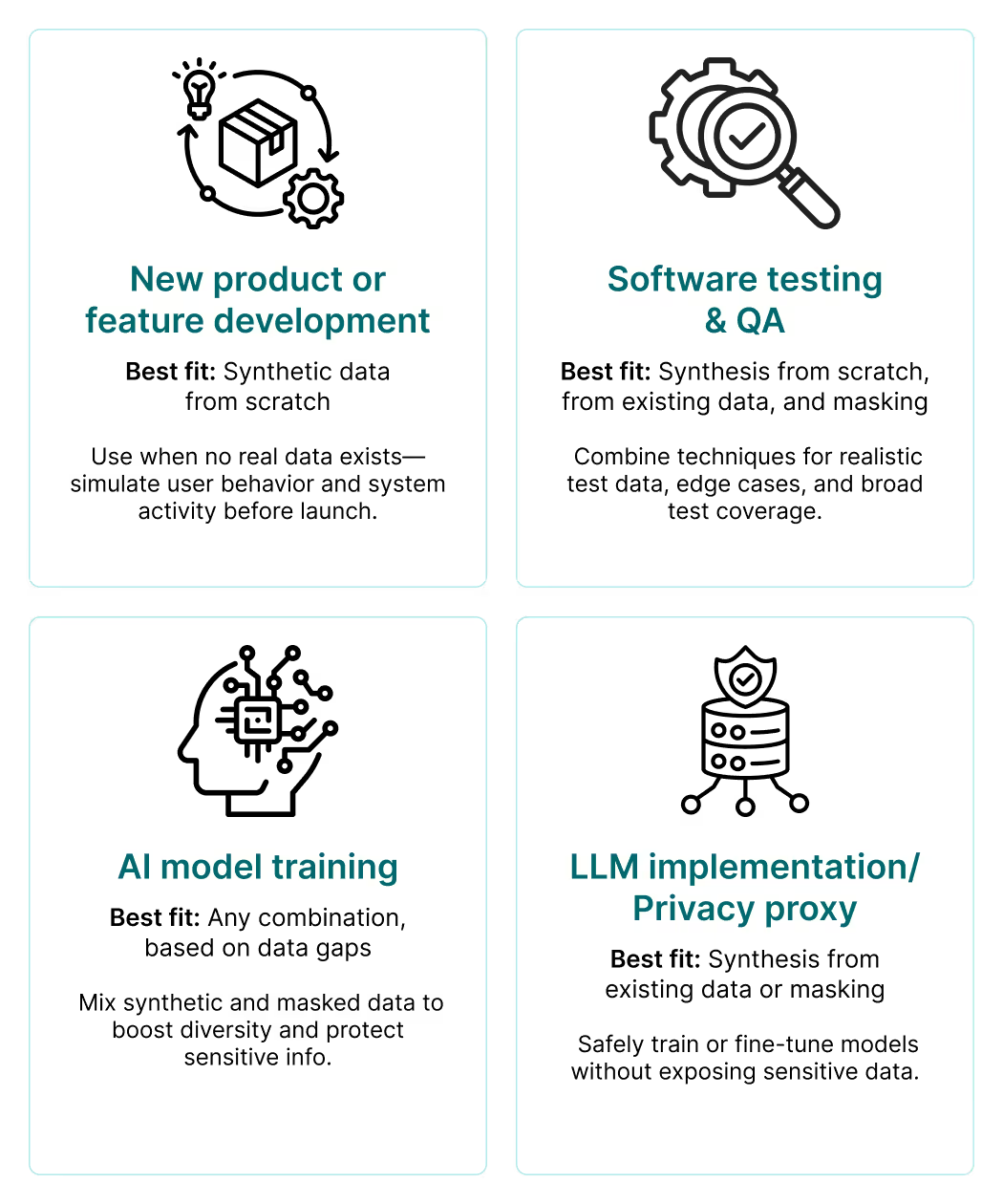

Different scenarios call for different approaches to anonymized data and synthetic data generation.

New product or feature development

Best fit: Synthetic data from scratch

Synthetic test data from scratch, like that offered by Tonic Fabricate, is the ideal solution when no production data exists. You can spin up custom datasets to simulate user behaviors, transaction patterns, and system loads before launch, enabling comprehensive testing and performance validation during early development phases.

Software testing and QA

Best fit: Synthesis from scratch, from existing data, + data masking

Both synthesis from existing data and data masking excel in this scenario and are the key capabilities offered by Tonic Structural. Masking preserves production data relationships for realistic testing, while data synthesis from scratch can supplement with edge cases and scenarios not present in your current dataset, improving overall test coverage.

AI model training

Best fit: Any combination, based on gaps and risk

For machine learning, you need data diversity and volume—any combination of masking and synthesis techniques, like those offered by Tonic Textual, works depending on your data gaps and privacy requirements. Use masked data for baseline patterns, synthetic data to address underrepresented scenarios, and generated data to augment training sets without exposing sensitive information.

LLM implementation/privacy proxy

Best fit: Synthesis from existing data or masking

Synthesis from existing data or masking both enable secure LLM integration, a key use case for Tonic Textual. These approaches let you leverage your data's value for prompt engineering and model fine-tuning while protecting sensitive information from exposure to external AI services.

Sales demos

Best fit: Any technique that balances realism with privacy

Multiple techniques work well for creating compelling demonstrations. Mask production data to maintain credibility while using synthetic data from scratch to showcase features and scenarios that highlight your product's capabilities without privacy concerns.

Benefits of combining data synthesis & data masking

Data synthesis and masking work better together than in isolation. When you combine them, you get the best of both worlds:

- Use data masking to anonymize production datasets while keeping your data model intact.

- Use synthetic data generation to achieve heightened realism, fill in gaps, model rare conditions, or build entire datasets where none exist.

- Layer both techniques to improve test coverage, reduce manual effort, and accelerate delivery.

For example, when testing a financial application, mask existing transaction data to maintain realistic user behaviors and account relationships. Then synthesize additional data to test international payments, unusual refund scenarios, and edge cases that rarely occur in production. This hybrid approach provides comprehensive test coverage while maintaining privacy and compliance.

However, it's important to understand that basic, manual masking approaches carry re-identification risks when data is combined with external sources or when datasets contain statistical outliers that could expose individual identities. To avoid these risks, a robust masking solution is essential.

The Tonic.ai product suite offers comprehensive solutions for data synthesis and data masking, including Tonic Fabricate for rule-based data generation from scratch, Tonic Structural for transformative structured data generation based on existing production data, and Tonic Textual for unstructured data synthesis based on existing free-text, audio, and video files.

The key insight is to treat data synthesis and masking as complementary tools rather than competing alternatives. The best test data management strategies use both techniques strategically, applying the right approach based on specific privacy requirements, data availability, and testing objectives.

Want to see how synthesis and masking work together in practice? Connect with our team to explore developer-first approaches to secure test data management.

.svg)

.svg)