Data privacy laws—GDPR, HIPAA, CPRA, and others—while necessary, make it difficult to train machine learning models. You need representative datasets to train accurate models, but if those datasets expose sensitive information, you’re putting users, systems, and your company at risk. Secure data generation gives you a way to move fast without compromising privacy. It lets you iterate, test, and deploy models with safety and compliance built in from the start.

It also helps your team work together. Product, engineering, security, and legal can all align around a shared dataset that’s safe by design. It might require more effort up front, but you’ll spend less time chasing red tape and more time building.

What is secure data generation?

Secure data generation is a method of creating datasets for machine learning that preserves utility while reducing or eliminating exposure to sensitive, identifying, or regulated information. It prevents problematic data from entering your pipeline from the start, improving compliance and data safety.

Synthetic data vs. de-identified data

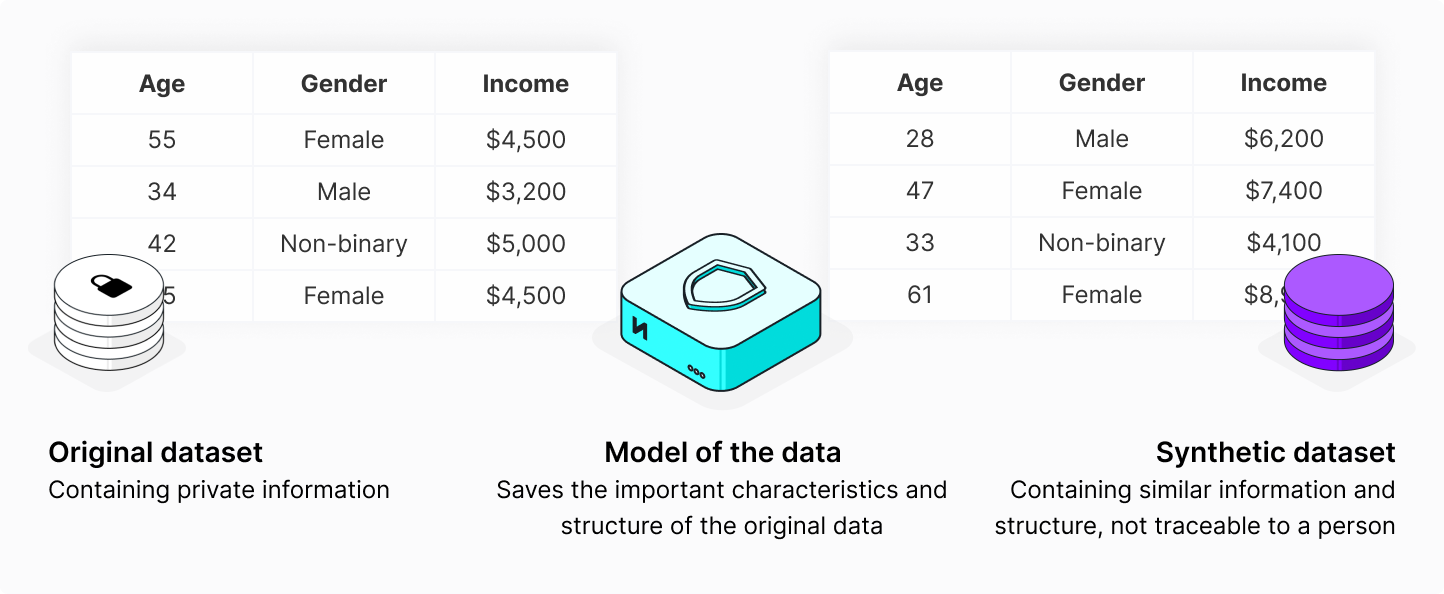

Synthetic data and de-identified data both protect privacy, but they solve different problems.

- De-identified data removes or masks personally identifiable information (PII) from real datasets. It’s useful when you need to preserve original formatting and structure.

- Synthetic data is created using statistical models or algorithms that simulate real-world data. It doesn’t include any original user information, but it can be modeled on and still reflects the same structure and behavior of real-world data.

These methods can be used together. For example, you might start by de-identifying a customer support database to remove direct identifiers like names or account numbers. Then, you could use that sanitized dataset to generate synthetic examples that preserve conversational patterns and issue types without retaining any trace of the original records.

This layered approach improves both realism and privacy assurance.

Use cases for secure data generation

In practice, most delays in data-driven development don’t come from tooling—they come from access. Waiting for approvals, masking scripts, or legal reviews slows teams down. The use cases below share a common pattern: they all involve environments where using real production data would introduce unnecessary risk, friction, or delay. By generating secure data tailored to these situations, you reduce exposure while accelerating delivery across your ML and software pipelines.

- Testing and QA: Replace production data with synthetic alternatives in test environments. This prevents accidental exposure and makes test cases repeatable. You also avoid delays caused by waiting for approval to access production data.

- Data sharing & SaaS demos: Share data with external teams or demo your product without revealing real customer records. This keeps your legal and security teams happy while giving partnerships and sales the assets they need.

- AI model training: Train models on datasets that mimic your real-world data without the risk of exposing user information. This is especially useful for fine-tuning on proprietary formats or custom user flows.

- Edge-case simulation: Create rare or risky scenarios, like fraud or system outages, and use them to improve your model’s performance. Synthetic data lets you generate thousands of these edge cases without needing to see them in production.

- LLM privacy proxy: Use a privacy layer that pre-processes inputs before they reach a large language model. This helps you prevent leaks before they happen, especially in applications that handle sensitive queries.

Unblock AI innovation with synthetic data that safeguards data privacy while preserving your data's context and relationships.

How to generate secure data

Secure data generation touches every part of your ML lifecycle—from how you access raw data to how you train, test, and audit your models. The steps below will help you design a secure data generation process that’s scalable, auditable, and tailored to your machine learning workflows.

Step 1: Audit your training data sources

Audit how sensitive data makes its way into your training datasets. Focus on which systems or exports developers use to create these datasets—production databases, user support logs, analytics dumps, etc. Even when data is pulled manually or compiled offline, regulated or identifiable information often slips in unnoticed.

By tracing the upstream sources that contribute to your training data, you can spot where privacy risks originate and apply the right safeguards before model development begins.

Or, use the Tonic suite of synthetic data solutions which offer built-in sensitive data detection and classification to help you map where PII or regulated data appears within your datasets.

Step 2: Select the right generation strategy

Pick a data generation method that matches your use case:

- Use rule-based synthesis to tailor data generation from scratch to fulfill certain requirements, like ensuring edge case coverage.

- Use transformative synthesis (e.g., masking, tokenization) when structure and relationships across your existing data need to stay intact.

- Use model-based synthesis when you need high realism for analytics or machine learning.

Read Tonic.ai’s How to Generate Synthetic Data: A Comprehensive Guide to dig deeper into these methods.

Hybrid tools can support multiple strategies and adapt to different teams. Tonic.ai, for example, offers multiple synthesis strategies by way of its products:

- Tonic Fabricate is built for generating synthetic data from scratch;

- Tonic Structural handles transformations and synthesis based on existing data;

- Tonic Textual specializes in free‑text redaction and synthesis for AI model training.

Step 3: Validate privacy and utility

Check whether your synthetic data is both safe and useful:

- Use testing methods to ensure synthetic records don’t match real ones too closely.

- Compare data structure, behavior, and distributions to your source dataset.

- Train a model and evaluate whether the performance matches expectations.

If the data is too close to real users, it’s a privacy risk. If it’s too generic, it won’t help your model learn. Find the balance—and automate validation where you can.

Step 4: Integrate into your ML pipeline

Make secure data generation part of your default workflow. Put it upstream of development, testing, or modeling and set it up to run automatically so that every team uses safe data without needing to ask. Use webhooks or APIs to refresh and validate datasets on demand. Add metadata so teams know what data they’re working with.

This whole process can be made easier with the Tonic product suite’s APIs and SDKs that let you script generation tasks, version them, and integrate them into your workflows.

Step 5: Document and monitor

Log everything: how you generated the data, how you validated it, and how you used it. Track what version went into which model and who had access. That way, you can defend your choices in an audit—or just retrace your steps when something breaks.

Tools that track data lineage make this easier. Tonic.ai provides auditing features to log and monitor data transformations and ensure adherence to data governance policies.

Making compliant datasets

When you generate secure datasets early, you can ship faster and reduce your reliance on production data. You also stay ahead of regulatory reviews and compliance issues.

Platforms like those offered by Tonic.ai make it easy to automate secure data generation, so you can focus on building smarter, safer AI models.

Book a demo to see how you can start training your models safely today.

.svg)

.svg)