Data masking means to protect sensitive data by replacing it with a non-sensitive substitute.

Snowflake is a cloud-based data warehouse platform that allows organizations to store, manage, and analyze large amounts of data.

Your Snowflake data might include highly sensitive values that you must mask before other teams can safely use the data to support development and testing.

So how do you do that?

In this guide, we'll compare dynamic data masking in Snowflake to Tonic Structural's static data masking, and explain why static data masking is the better option. We'll also provide a step-by-step overview of the Structural masking workflow.

Benefits of data masking

To start, let's talk about why you need to mask data in the first place.

At a high level, data masking allows you to produce realistic data for faster and higher-quality development, while ensuring that you do not leak any sensitive information.

Types of data masking

Data masking might be either dynamic or static.

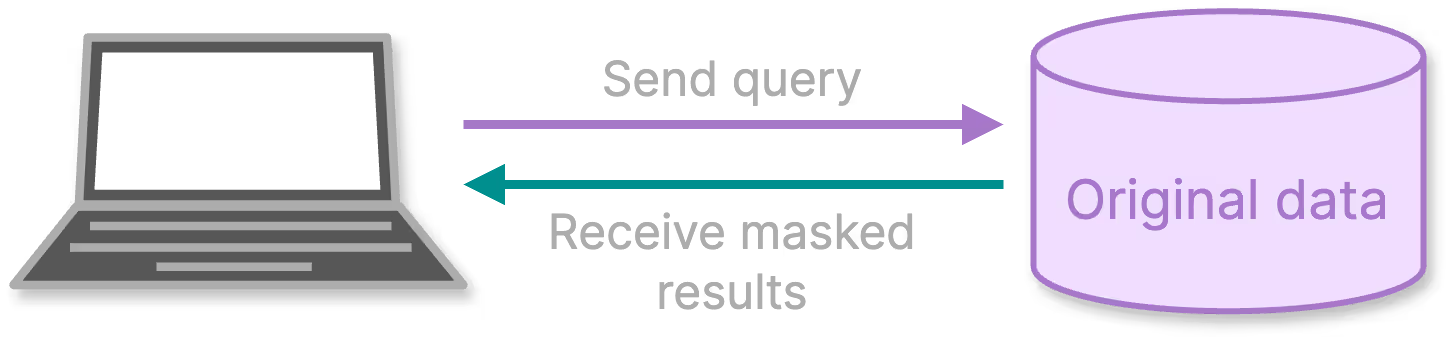

Dynamic data masking

Dynamic data masking happens in real time when a user issues a query. The returned read-only results are masked based on their user role and the query specifics. The original data remains untouched.

On the plus side, the masking is in real time and does not require additional storage and processing.

However, it requires a complex configuration of access and masking rules. And because it only masks query results, it also isn't really useful for development and testing, which require editable data.

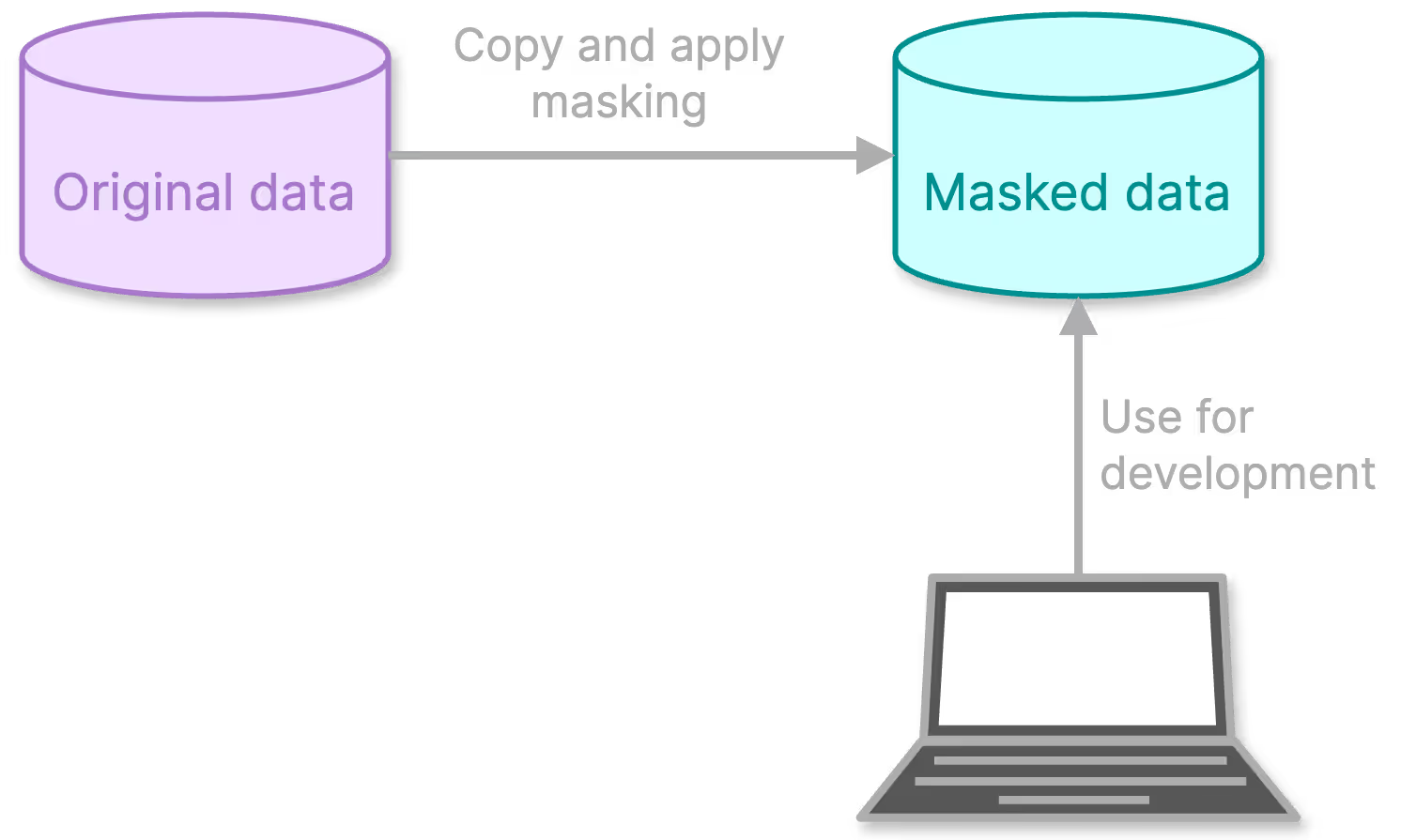

Static data masking

Static data masking permanently modifies the original data—usually a copy of the original data—to create a new dataset.

In the new dataset, all of the sensitive values are masked.

Static data masking does require some additional storage and processing.

But once you complete the masking process, you have a secure set of data that you can provide to your development teams without having to worry about privacy and compliance.

And your developers have realistic, editable data that they can use as they iterate through rounds of development and testing, to quickly produce high-quality software products.

Options for data masking in Snowflake

Snowflake dynamic data masking

Snowflake comes with a built-in dynamic data masking capability.

However, getting masked query results is not that useful for development scenarios. Developers need to run tests that both read and update the data—create a widget, edit a widget, delete a widget.

And as with any masking tool that is built into a database platform, it is specific to that platform. So if you also have databases on other platforms, you would need to learn and maintain a different data masking solution for each one.

Additionally, database-native data masking features are often more limited. They might have less complex masking techniques, which results in less realistic data. Or they might not have more advanced capabilities, such as database subsetting or custom sensitivity rules to detect sensitive data types that are specific to your organization.

Tonic Structural static data masking

Tonic Structural is specifically built to produce realistic masked data.

Because of this, it provides several robust features—such as consistency, linking, and subsetting—that enhance the realism and repeatability of the resulting data, and allow you to produce different sizes of data to accommodate different use cases.

Structural provides native data connectors to support a wide range of database platforms. You can mask your Snowflake data, your Oracle data, your PostgreSQL data, and text files in S3 buckets from a single Structural instance, while you maintain consistency in how your data is transformed across those database types.

Security is paramount in Structural, not just for making sure that data is masked, but for controlling who can configure the masking and view the original and masked data. The platform offers built-in security features such as single sign-on (SSO), role-based access, and audit trails.

Accelerate your release cycles and reduce bugs in production by automating realistic test data generation.

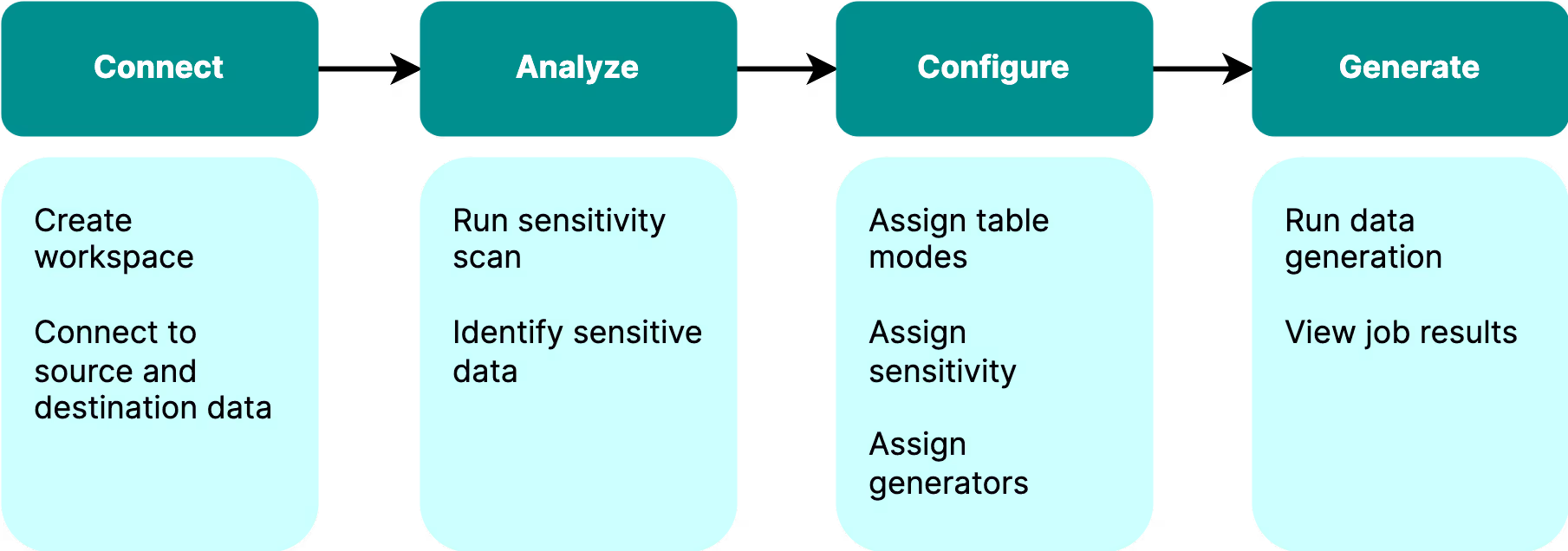

How to use Tonic Structural to mask Snowflake data

How do you use Structural for data masking in Snowflake? Here's a quick overview of the Structural workflow.

Step 1: Connect to your data in Snowflake

To start, you create a Structural workspace. For Snowflake, Structural allows you to process files either through AWS or through Azure.

In the workspace, you configure the connection to the source data to de-identify, then provide the connection to where Structural will write the masked destination data.

Step 2: Identify sensitive values

For the new workspace, Structural automatically runs a sensitivity scan of the source data to identify sensitive values. You can also run scans manually to catch sensitive values in newer source data.

Based on the scan, Structural flags columns that contain sensitive values, and identifies the type of value.

The sensitivity type might be a built-in type that Structural automatically identifies, based on the column name and content. It can also be a custom sensitivity type that you configure. Custom sensitivity rules allow Structural to identify additional sensitive values that might be specific to your organization or industry.

Structural flags the detected columns so that you can easily identify them.

Step 3: Configure generators

Now that you know the values that you have to protect, you can assign Structural generators to those columns. A Structural generator modifies the source value so that it is de-identified. For example, it might scramble the characters or replace the value with a realistic substitute—replace the first name John with Michael, or replace the city Houston with the city Milwaukee.

The sensitivity scan identifies a recommended generator configuration for each column that it flags as sensitive, based on the sensitivity type. For example, for a first name, the scan recommends the Name generator configured to produce a first name.

From Privacy Hub, which provides a high-level overview of the data protection status, you can quickly apply all of these recommendations with a single click.

You can also manually assign generators and update the generator configuration to meet your needs.

Step 4: Optionally, configure database subsetting or table filtering

Depending on your use case, and the size of the original data, you might want to produce a smaller subset of de-identified data.

Structural subsetting allows you to produce a smaller dataset that maintains referential integrity. For example, you might configure a subset to include all sales records from California, along with the related records from other tables.

If referential integrity is not as important, then you can instead configure table filters. Each table filter is a simple WHERE clause that identifies the records to mask and then to include in the output data.

Step 5: Run data generation to hydrate your destination database

Finally, after you complete the configuration, you run data generation.

The Structural data generation process applies the configuration, and writes the resulting de-identified data to the destination database.

Recap: How to best protect sensitive Snowflake data

Data masking replaces sensitive values with non-sensitive ones.

Dynamic data masking, which is what Snowflake provides out-of-the-box, returns masked results in response to database queries.

A more versatile and flexible option is static data masking, such as that provided by Tonic Structural. Static data masking allows you to produce realistic, secure, and editable versions of your original sensitive data that is stored in Snowflake.

Structural automatically identifies sensitive values in your data, and allows you to immediately apply masking to those values. You can then generate right-sized datasets for development teams to use for accelerated development and testing.

To learn more about Structural data masking for Snowflake, connect with our team today.

.svg)

.svg)