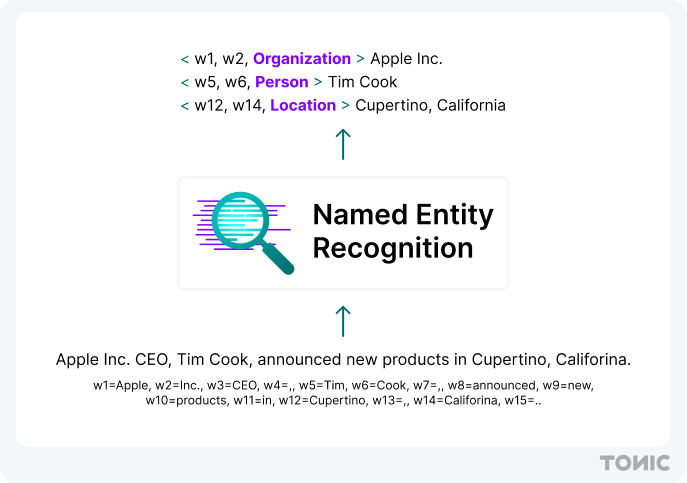

Unstructured text holds valuable insights but often hides sensitive personal information that triggers privacy concerns. Named entity recognition (NER) automates the identification and classification of names, addresses, IDs, and other PII in text, so you can flag, redact, or synthesize realistic replacements at scale. By embedding NER into your data pipelines, you reduce manual effort, speed up audits, and support compliance-aligned workflows without sacrificing downstream utility.

NER techniques

Across projects, you’ll choose between rule-based, machine learning, or hybrid named entity recognition approaches based on accuracy, customization, and maintenance trade-offs. Each method has a role in automating PII detection.

Rule-based approaches rely on handcrafted patterns, regular expressions, and dictionaries to match entity types—Social Security number formats or known VIP names, for example. They're easy to inspect and modify, but might miss edge cases and require ongoing maintenance as text sources evolve.

The trade-off: high precision on known patterns but poor recall on variants you haven't explicitly coded for. In Tonic Textual you can augment built-in detection with custom rules to capture organization-specific patterns.
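As a minimal sketch of the rule-based trade-off, the snippet below uses Python's standard `re` module to match one Social Security number format. The pattern and the `find_ssns` helper are illustrative assumptions, not Tonic Textual's built-in rules.

```python
import re

# Illustrative pattern: US SSNs written with dashes, e.g. 123-45-6789.
# Assumption: only the dashed format counts as a match.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

def find_ssns(text):
    """Return (start, end, matched_text) for each SSN-shaped span."""
    return [(m.start(), m.end(), m.group()) for m in SSN_PATTERN.finditer(text)]

sample = "Customer SSN is 123-45-6789; alternate on file: 123 45 6789."
matches = find_ssns(sample)

for start, end, value in matches:
    print(f"SSN at {start}-{end}: {value}")
```

The dashed value is matched, but the space-separated variant is not: high precision on the coded format, and a recall gap on a variant nobody wrote a rule for.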

Machine learning approaches use statistical or deep learning models trained on annotated corpora to generalize beyond exact pattern matches. They adapt to varied contexts and achieve higher recall, but need labeled examples and infrastructure for retraining.

The trade-off: better coverage across diverse texts but higher false positive rates that require threshold tuning. Tonic Textual leverages proprietary NER models trained across 50+ entity types to balance precision and coverage. Its newly launched custom entity types capability allows users to train custom detection models within Textual to identify sensitive information unique to their organization.

Hybrid approaches combine rules and ML to guide predictions—rules filter obvious non-entities or enforce strict formats, while ML handles ambiguous contexts. This reduces false positives from pure ML and mitigates rule complexity. Tonic Textual offers unlimited flexibility to design your own entities and self-serve detection refinement.
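One way to picture the hybrid pattern is a rule layer that vets model output. In this sketch the model candidates are hard-coded, hypothetical data standing in for a real NER model, and `FORMAT_RULES` and `apply_rules` are illustrative names rather than part of any Tonic Textual API.

```python
import re

# Strict-format rules for labels where a regex is authoritative (illustrative).
FORMAT_RULES = {
    "SSN": re.compile(r"\d{3}-\d{2}-\d{4}"),
    "EMAIL": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
}

def apply_rules(candidates):
    """Keep model candidates unless a strict-format rule for their label rejects them."""
    kept = []
    for cand in candidates:
        rule = FORMAT_RULES.get(cand["label"])
        if rule is not None and not rule.fullmatch(cand["text"]):
            continue  # the model's guess violates the required format
        kept.append(cand)
    return kept

# Hypothetical model output; a real pipeline would get this from an NER model.
model_candidates = [
    {"label": "PERSON", "text": "Alice Smith", "score": 0.97},
    {"label": "SSN", "text": "123-45-6789", "score": 0.91},
    {"label": "SSN", "text": "order 4471", "score": 0.62},
    {"label": "EMAIL", "text": "alice@example.com", "score": 0.88},
]
filtered = apply_rules(model_candidates)
```

Labels without a strict format, such as PERSON, pass through on the model's judgment, while the malformed SSN candidate is dropped as a likely false positive.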

Who benefits from NER-driven compliance automation?

Whether you’re responsible for data protection or building ML pipelines, automating entity detection saves time and reduces errors. Manual PII review doesn't scale: a data engineer reviewing 10,000 support tickets at 30 seconds each spends roughly 83 hours (10,000 × 30 s = 300,000 s), and still misses edge cases that simple regex checks can't catch.

NER-driven automation processes the same volume in minutes with consistent accuracy. Teams that commonly rely on NER-driven workflows include:

- Data protection officers, privacy engineers, and legal teams who must demonstrate defensible controls

- Platform, QA, and ML engineering teams that process large volumes of free text or logs

- Outsourcing and vendor teams that need to share unstructured datasets without exposing PII

- Security and incident response teams that need fast, accurate PII discovery across repositories

Named entity recognition also enables practical workflows that manual processes can't support: real-time log sanitization before third-party monitoring tools ingest data, automated redaction of customer support transcripts for offshore QA teams, and safe dataset generation for training customer-facing chatbots.


Using NER for compliance

You can use named entity recognition to satisfy data privacy compliance requirements like those in the GDPR and the CCPA. In practice, you run a Tonic Textual workflow to detect sensitive entities, redact or tokenize them, and optionally synthesize realistic replacements. The following steps outline a typical PII sanitization pipeline for unstructured text.

Step 1: Automate sensitive data detection

Connect Tonic Textual to your data sources—support tickets, chat logs, documents—and let its proprietary NER models tag candidate PII spans. You'll get entity labels (PERSON, EMAIL, ID) along with confidence scores.

Confidence scores typically range from 0.0 to 1.0. Entities scoring above 0.85 are usually reliable, while scores between 0.5 and 0.85 warrant review. Start with a high auto-redaction threshold (0.9) and lower it if your sample batch shows too many missed entities (false negatives). Review that sample batch for missed patterns and false positives, then adjust detection thresholds or entity definitions before scaling to full datasets.
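The score bands above can be sketched as a small triage function. The entity dictionaries, the `triage` helper, and the bucket names are assumptions for illustration; the actual shape of detection results depends on the tool you use.

```python
def triage(entities, auto_threshold=0.85, review_threshold=0.5):
    """Split detected entities into auto-redact, human-review, and ignore buckets."""
    buckets = {"auto": [], "review": [], "ignore": []}
    for ent in entities:
        if ent["score"] >= auto_threshold:
            buckets["auto"].append(ent)
        elif ent["score"] >= review_threshold:
            buckets["review"].append(ent)
        else:
            buckets["ignore"].append(ent)
    return buckets

# Hypothetical detection output for a single support ticket.
detected = [
    {"label": "PERSON", "text": "Alice Smith", "score": 0.96},
    {"label": "EMAIL", "text": "alice@example.com", "score": 0.91},
    {"label": "ID", "text": "EMP-20417", "score": 0.71},
    {"label": "PERSON", "text": "May", "score": 0.32},
]
buckets = triage(detected)

for name, items in buckets.items():
    print(name, [e["text"] for e in items])
```

In a privacy setting, it is worth sampling the "ignore" bucket as well during validation, since anything dropped there is a potential false negative.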

Step 2: Customize entity configuration

Every organization has unique identifiers such as employee codes, proprietary IDs, or internal project names. To make sure those entities are detected, you can either train custom detection models on your own data through Textual’s UI-based workflow or define regular expressions in Tonic Textual. This step lets you capture domain-specific PII that general models might overlook, giving you end-to-end coverage.

Step 3: Redaction via tokenization

Once entities are detected, apply consistent tokenization: Replace each sensitive span with a token (for example, `[NAME_1]`, `[EMAIL_3]`) while preserving the original text structure. Tokenization helps maintain referential integrity—every occurrence of “Alice Smith” becomes the same token—so downstream workflows can still distinguish unique entities without revealing real data.
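A minimal sketch of consistent tokenization, assuming entity spans arrive as dictionaries with `label`, `start`, and `end` keys (a hypothetical shape). The `tokenize` helper is illustrative and is not Tonic Textual's implementation.

```python
import re

def tokenize(text, entities):
    """Replace each span with a label-indexed token, reusing tokens for repeated values."""
    token_for = {}  # (label, value) -> token
    counters = {}   # label -> number of distinct values seen so far
    out, cursor = [], 0
    for ent in sorted(entities, key=lambda e: e["start"]):
        value = text[ent["start"]:ent["end"]]
        key = (ent["label"], value)
        if key not in token_for:
            counters[ent["label"]] = counters.get(ent["label"], 0) + 1
            token_for[key] = f"[{ent['label']}_{counters[ent['label']]}]"
        out.append(text[cursor:ent["start"]])
        out.append(token_for[key])
        cursor = ent["end"]
    out.append(text[cursor:])
    return "".join(out), token_for

text = "Alice Smith emailed bob@example.com. Later, Alice Smith called Bob Jones."

# Stand-in for detector output: locate known values to build the spans.
known = [("NAME", "Alice Smith"), ("EMAIL", "bob@example.com"), ("NAME", "Bob Jones")]
entities = [
    {"label": label, "start": m.start(), "end": m.end()}
    for label, value in known
    for m in re.finditer(re.escape(value), text)
]

redacted, mapping = tokenize(text, entities)
print(redacted)
```

Both occurrences of "Alice Smith" map to the same token, so downstream code can still count distinct people. The `mapping` dictionary holds the real values and should be protected or discarded accordingly.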

Step 4: Context-aware data synthesis

To maintain realistic patterns in your text, you may choose to generate substitutes instead of blanking out everything. Tonic Textual can replace first names, dates, or addresses with synthetic but plausible values drawn from configurable distributions. You pick per-entity actions—for instance, synthesize PERSON and redact ORGANIZATION—and preserve readability and statistical shape for tasks like model training.
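Per-entity actions can be sketched with a simple lookup table. Everything here is an assumption for illustration: the `ACTIONS` table, the tiny `FAKE_NAMES` pool (a real system would draw from much richer, configurable distributions), and the hash-based choice that keeps replacements repeatable.

```python
import hashlib
import re

FAKE_NAMES = ["Jordan Lee", "Priya Patel", "Sam Rivera", "Morgan Chen"]

# Per-entity actions: synthesize people, redact organizations.
ACTIONS = {"PERSON": "synthesize", "ORGANIZATION": "redact"}

def synthesize_name(value, seed="demo-seed"):
    """Pick a fake name deterministically so the same input gets the same output."""
    digest = hashlib.sha256(f"{seed}:{value}".encode("utf-8")).digest()
    return FAKE_NAMES[digest[0] % len(FAKE_NAMES)]

def transform(text, entities):
    """Apply the configured action to each span; unknown labels default to redaction."""
    out, cursor = [], 0
    for ent in sorted(entities, key=lambda e: e["start"]):
        value = text[ent["start"]:ent["end"]]
        if ACTIONS.get(ent["label"], "redact") == "synthesize":
            replacement = synthesize_name(value)
        else:
            replacement = f"[{ent['label']}]"
        out += [text[cursor:ent["start"]], replacement]
        cursor = ent["end"]
    out.append(text[cursor:])
    return "".join(out)

text = "Alice Smith joined Acme Corp. Alice Smith leads support."

# Stand-in for detector output: locate known values to build the spans.
known = [("PERSON", "Alice Smith"), ("ORGANIZATION", "Acme Corp")]
entities = [
    {"label": label, "start": m.start(), "end": m.end()}
    for label, value in known
    for m in re.finditer(re.escape(value), text)
]

result = transform(text, entities)
print(result)
```

With a pool this small, two different people can collide on the same fake name, so treat the pool size and the seed handling as things to tune rather than as a recommended design.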

Step 5: Optional expert determination

For HIPAA environments, you may need a formal expert determination to certify minimal re-identification risk. You export your redacted/synthesized samples and engage a qualified third-party reviewer. In practice, this may also include a risk analysis report, but the core NER workflow feeds directly into that assessment.

Step 6: Data generation and export

After transformation, export sanitized text for analytics or model training. At this stage, run your validation checks:

- Utility checks: Compare token distributions, check label quality, and confirm expected model performance doesn’t spike (which could indicate leftover PII or training data leakage).

- Privacy checks: Run nearest-neighbor or proximity tests to ensure no synthetic sample is too close to real records, and confirm all direct identifiers are removed.

- Reproducibility: Log your Tonic Textual version, configuration settings, and random seeds so you can regenerate or audit results later.
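Two of the checks above lend themselves to a short sketch: a direct-identifier leak check and a reproducibility manifest. The helper names and the manifest fields are hypothetical, and the leak check is a plain substring search rather than the nearest-neighbor test, which needs a similarity model.

```python
import hashlib
import json

def leaked_values(sanitized_text, original_values):
    """Return original sensitive values that still appear in the sanitized text."""
    lowered = sanitized_text.lower()
    return sorted(v for v in set(original_values) if v.lower() in lowered)

def run_manifest(tool_version, config, seed):
    """Record what is needed to regenerate or audit a sanitization run."""
    config_json = json.dumps(config, sort_keys=True)
    return {
        "tool_version": tool_version,
        "seed": seed,
        "config_sha256": hashlib.sha256(config_json.encode("utf-8")).hexdigest(),
    }

originals = ["Alice Smith", "alice@example.com"]
sanitized = "[NAME_1] wrote in from alice@example.com about a refund."
leaks = leaked_values(sanitized, originals)

# Placeholder version string and config; substitute your real values.
manifest = run_manifest("x.y.z", {"PERSON": "synthesize", "EMAIL": "redact"}, seed=42)
print(leaks)
print(json.dumps(manifest, indent=2))
```

Hashing the sorted configuration gives a stable fingerprint, so two runs with the same settings produce the same `config_sha256` regardless of key order.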

How Tonic.ai enables NER-driven compliance automation

Tonic Textual brings together detection, transformation, and audit capabilities in a single service you can embed in ML and AI workflows or run on demand.

Detection and enrichment

Tonic Textual is built on proprietary named entity recognition models that tag and surface sensitive data spans across unstructured text. You can supplement built-in entity types with custom entities or regex rules, ensuring both common and organization-specific identifiers are caught before they reach downstream systems.

Safe transformation

Once tagged, you choose per-entity transformation modes: consistent tokenization or context-aware synthesis. This supports compliance-aligned workflows and reduces risk by keeping real personal information out of training data, while preserving the statistical shape and readability needed for model training or user testing.

Audit and reporting

Tonic Textual captures detailed usage logs—records processed, entities detected, transformations applied, and user actions. You can export these audit reports to satisfy compliance requests and demonstrate how sensitive data was handled, providing a defensible trail for auditors or regulators.

Try Tonic.ai for sensitive entity detection in unstructured data today

Named entity recognition automates the most time-consuming parts of unstructured data compliance: detection, redaction, synthesis, and auditing. Embed Tonic Textual into your pipeline to reduce manual review, support compliance-aligned workflows, and keep real personal information out of your training data.

Ready to see Tonic Textual in action? Book a demo today and discover how easy PII protection at scale can be.
